COMPUTEX Taipei, 6th June 2018 - GIGABYTE, an industry leader in server systems and motherboards, has collaborated with local cloud and storage platform providers to showcase an integrated AI / Data Science Cloud at COMPUTEX 2018, demonstrating how customers can build a private cloud to own & protect their data while connecting with public cloud services, and incorporate inbuilt AI capabilities to use Big Data for real-time deep learning and inference processing (AIoT).

As part of a daily feature series published during the show, today we take a closer look at the platform architecture and features of the AI / Data Science Cloud that we are demonstrating at our booth, including how AI / ML capabilities are integrated into this cloud.

GIGABYTE’s AI / Data Science Cloud Demonstration Overview

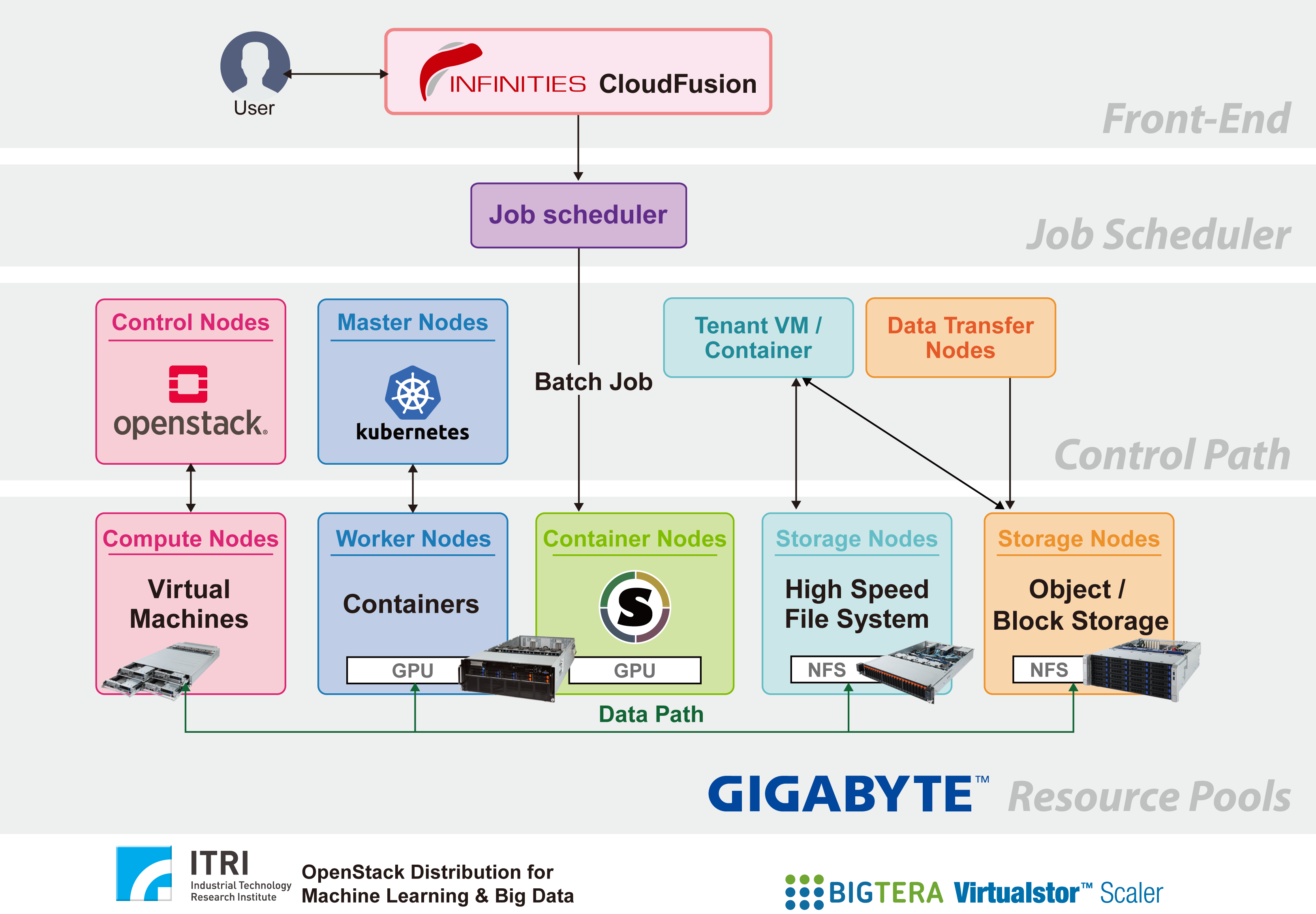

The architecture of this cloud consists of three main layers:

1. Management platform layer (front-end): provided by InfinitiesSoft CloudFusion

2. Virtualization layer (control nodes & resource pools): created with IOD-MB (ITRI OpenStack Distribution for Machine Learning & Big Data) from ITRI, and integrated with Bigtera VirtualStor Scaler storage

3. Hardware layer: GIGABYTE’s H-Series, G-Series and S-Series servers

Diagram A: AI / Data Science Cloud Platform Overview

ITRI’s OpenStack Distribution

ITRI (The Industrial Technology Research Institute of Taiwan) is a nonprofit R&D organization engaging in applied research and technical services. Founded in 1973, ITRI has played a vital role in transforming Taiwan's economy from a labor-intensive industry to a high-tech industry.

GIGABYTE’s AI / Data Science Cloud has been created using ITRI’s IOD-MB, a full-featured IaaS solution that integrates OpenStack and Kubernetes for simultaneous VM and container orchestration, and a pioneering platform for ML (machine learning) / DNN (Deep Neural Network) and Big Data applications.

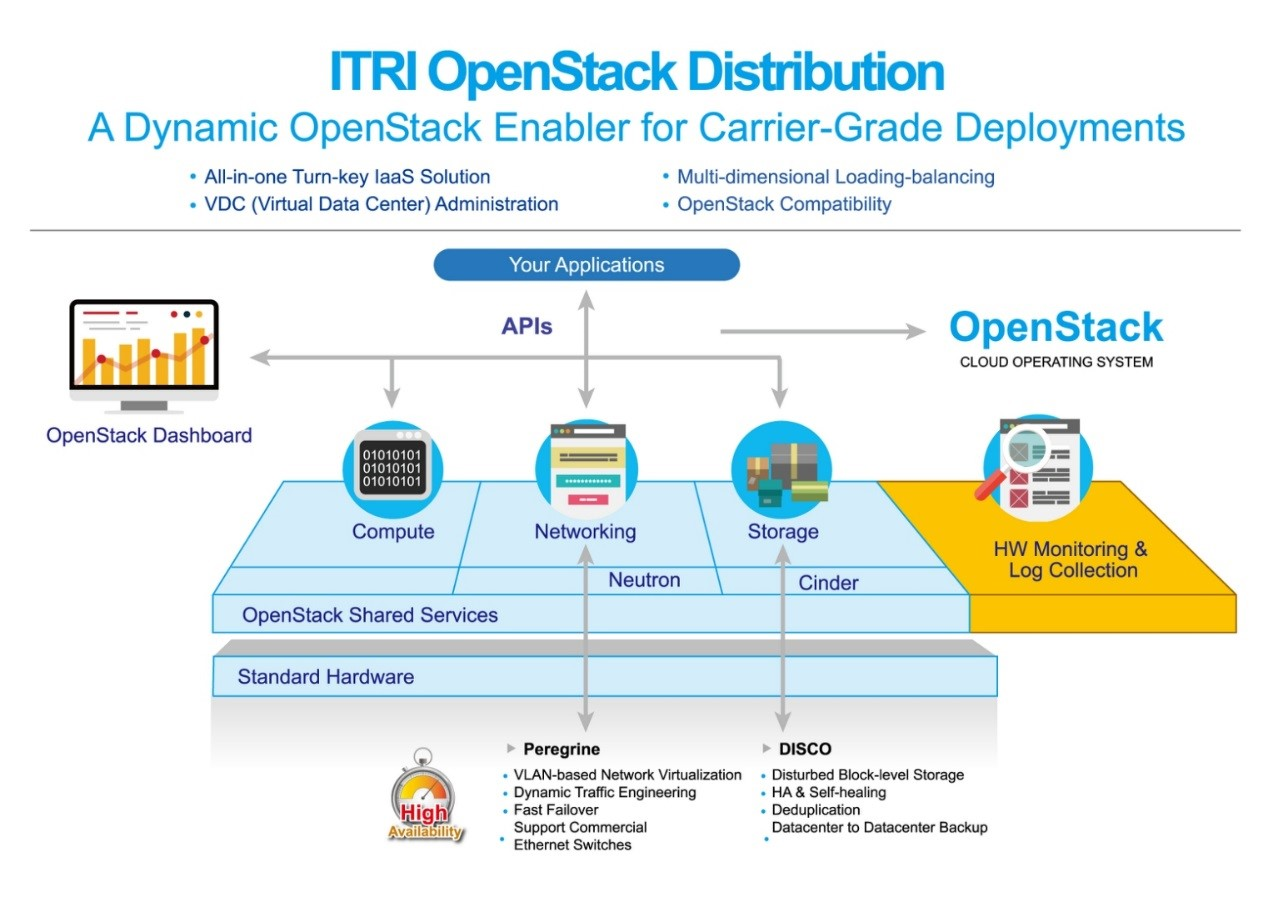

IOD-MB is based on IOD (ITRI’s OpenStack Distribution), an all-in-one, large-scale cloud data center management system designed for organizations that want to provide IaaS solutions. IOD brings together ITRI's experience in developing cloud operating systems over the past six years and the advantages of OpenStack's open software architecture, giving users a low-cost, highly flexible solution. With ITRI IOD, an organization can deploy IaaS without spending time and resources on integration and testing, and can instead focus on the needs of its users or customers for IaaS, PaaS, and SaaS.

Diagram B: ITRI IOD Platform Features

IOD includes the following enhancements:

Peregrine: a Neutron plugin that supports network virtualization.

DISCO: a Cinder plugin for software-defined storage.

PDCM (Physical Data Center Management): a full stack monitoring and alert solution

Security Additions: “deep” defensive network security for deployed infrastructure across OSI layers

Server Priming Solution: ZETSPRI (ZEro Touch Server PRovisioning from ITRI), a “priming” tool for servers before being used in IaaS for any purpose - compute, networking or storage

IOD-MB: ITRI’s OpenStack with Machine Learning and Big Data Capabilities

IOD-MB (ITRI’s OpenStack Distribution for Machine Learning & Big Data) is an enhanced version of IOD that is integrated with InfinitiesSoft CloudFusion and Bigtera VirtualStor Scaler and adds Big Data analysis and ML / DNN capabilities.

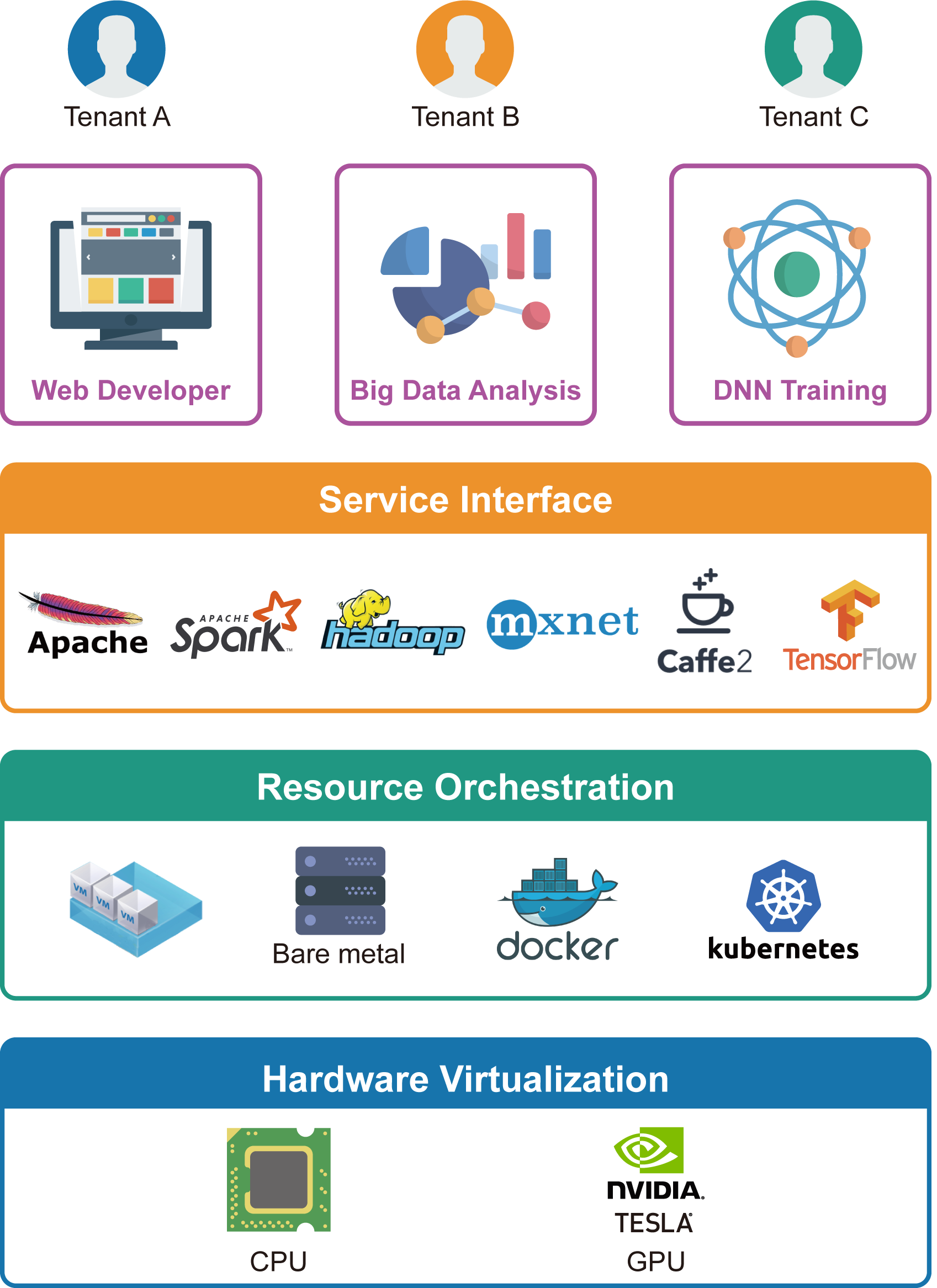

Diagram C: AI / Data Science Cloud Platform Capabilities

IOD-MB incorporates the following features:

Kubernetes Integration: for the automatic policy-based deployment of VMs and containers onto any compute node through the management of CloudFusion.

Kubernetes Master Node Clustering: to provide full HA and load balancing capability for Kubernetes.

Tenant Based Isolation: OpenStack has an inherent architectural concept of a tenant which is completely missing from Kubernetes. This has two detrimental consequences:

• Security: the containers or VMs belonging to different tenants are not isolated from one another at the network level (they can ping one another across tenant boundaries).

• Network Efficiency: to compensate for the above, subnets of different tenants can be made to communicate securely through routers (layer 3) and firewall settings, but this comes at a cost to scalability and adds network latency and overhead.

To remedy this issue, IOD-MB extends the layer 2 mechanism (VLAN) for tenant isolation to both containers and VMs across all tenants under the joint management infrastructure of OpenStack and Kubernetes. That is, member containers and VMs within one tenant are free to communicate with one another while being isolated from those of another tenant. Network security is assured without incurring networking overhead or sacrificing scalability.
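The isolation rule described above can be illustrated with a minimal Python sketch. It is a toy model only, not IOD-MB code: the `Workload` class, the `TENANT_VLAN` mapping, the VLAN IDs and the tenant names are all hypothetical. It simply shows the intended behaviour, where VMs and containers of the same tenant share one layer 2 segment and workloads of different tenants do not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    kind: str    # "vm" or "container"
    tenant: str


# Hypothetical tenant-to-VLAN mapping; the IDs are illustrative only.
TENANT_VLAN = {"tenant-a": 101, "tenant-b": 102}


def vlan_of(workload: Workload) -> int:
    """Return the VLAN ID assigned to the workload's tenant."""
    return TENANT_VLAN[workload.tenant]


def can_communicate_l2(a: Workload, b: Workload) -> bool:
    """Two workloads share a layer 2 segment only if their tenants map to the same VLAN."""
    return vlan_of(a) == vlan_of(b)


vm_a = Workload("training-vm", "vm", "tenant-a")
ctr_a = Workload("jupyter", "container", "tenant-a")
ctr_b = Workload("jupyter", "container", "tenant-b")

print(can_communicate_l2(vm_a, ctr_a))   # same tenant, VM and container
print(can_communicate_l2(ctr_a, ctr_b))  # different tenants
```

Note that the check depends only on the tenant, not on whether the workload is a VM or a container, which mirrors the idea of applying one isolation mechanism to both.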

NFS to Object Storage Gateway: to ease the migration of legacy software that relies on NFS semantics/syntax onto any state-of-the-art object storage system (such as Bigtera VirtualStor).

Automatic Deployment of DNN Development Environment: IOD-MB automates the deployment of a DNN (Deep Neural Network) development environment (such as TensorFlow, TensorBoard, Caffe, Jupyter, NVIDIA DIGITS, etc.) in the form of containers onto GPU-enabled servers such as GIGABYTE’s G481-S80.
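As a rough illustration of what a containerized DNN environment on a GPU node can look like at the Kubernetes level, the Python sketch below builds a plain Pod manifest that requests one GPU. It does not reproduce how IOD-MB or CloudFusion performs the deployment. The helper `gpu_pod_manifest`, the pod name, the `app` label, the container image and port 8888 are assumptions chosen for the example, and the `nvidia.com/gpu` resource name assumes that the NVIDIA device plugin is installed on the cluster.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Kubernetes Pod manifest (as a dict) that requests GPUs.

    Hypothetical helper for illustration; not part of IOD-MB.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": "dnn-dev"}},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Assumed port for a notebook server inside the container.
                    "ports": [{"containerPort": 8888}],
                    # GPUs are requested through resource limits; this resource
                    # name is exposed by the NVIDIA device plugin.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# The image name is an assumption for the example.
manifest = gpu_pod_manifest("jupyter-tf", "tensorflow/tensorflow:latest-gpu-jupyter")
print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and submitted with `kubectl apply -f`, since Kubernetes accepts manifests in JSON as well as YAML.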

User Authentication & Authorization of DNN IDEs (Integrated Development Environments): unlike traditional HPC, the nature of DNN development/training is interactive. Hence, for the security and integrity of the system, IOD-MB provides mandatory authentication and authorization for a user coming from the Internet to initiate an interactive session with the likes of Jupyter or TensorFlow, a safeguard that is missing from the original package distributions.

Integration with HPC Job Schedulers: unlike VMs and generic containers, containers for DNN training occupy GPU-enabled servers for acceleration and quicker iterations. Even so, each submitted job may take days or weeks to complete one iteration. As a result, compute/GPU resources are scarce, and traditional job schedulers such as SLURM or Univa Grid Engine have to come into the picture for resource (storage and GPU) management, coordination and scheduling. IOD-MB also features this capability.
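To show why a scheduler is needed when long-running jobs compete for a fixed pool of GPUs, here is a deliberately simplified first-in, first-out queue in Python. It is a toy model and does not use or imitate the SLURM or Univa Grid Engine interfaces. The `Job` and `GpuQueue` classes, the job names and the pool size of 8 GPUs are all assumptions made for the example.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    gpus: int  # number of GPUs the job needs for its whole run


class GpuQueue:
    """Toy strict-FIFO scheduler over a fixed pool of GPUs (illustrative only)."""

    def __init__(self, total_gpus: int) -> None:
        self.free = total_gpus
        self.pending = deque()
        self.running = {}

    def submit(self, job: Job) -> None:
        self.pending.append(job)
        self._dispatch()

    def finish(self, name: str) -> None:
        # Release the GPUs held by a completed job, then try to start more work.
        job = self.running.pop(name)
        self.free += job.gpus
        self._dispatch()

    def _dispatch(self) -> None:
        # Strict FIFO: start the job at the head of the queue only while it fits.
        # Later jobs never jump ahead, even if they would fit.
        while self.pending and self.pending[0].gpus <= self.free:
            job = self.pending.popleft()
            self.free -= job.gpus
            self.running[job.name] = job


queue = GpuQueue(total_gpus=8)
queue.submit(Job("resnet", 4))
queue.submit(Job("bert", 8))   # must wait: only 4 GPUs are free
queue.submit(Job("small", 2))  # waits behind "bert" under strict FIFO
print(sorted(queue.running), [j.name for j in queue.pending])

queue.finish("resnet")         # frees 4 GPUs, so "bert" can now start
print(sorted(queue.running), [j.name for j in queue.pending])
```

In this sketch the small job waits even though two GPUs would fit it, which is the kind of utilization trade-off that production schedulers address with policies such as backfilling and priorities.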

Extended Monitoring of Containers and GPU Resources: as the viability and status of scarce resources and additional virtual units (containers) have to be closely watched and managed, it is natural to expand the realm and coverage of PDCM to both Kubernetes and the GPU-enabled servers for best utilization and maximal availability.

Use Case Scenario

Problem

AI / ML data engineers & scientists usually need to spend more than a month building and testing the infrastructure, software stack, and framework environment required for a machine learning platform. Even when the setup is complete, they still need to spend 25% of their time each day on system maintenance, adjustment and deployment scheduling.

Solution

InfinitiesSoft CloudFusion integrates ITRI’s IOD-MB, Kubernetes and Bigtera VirtualStor Scaler into a single platform, featuring a dual user-oriented / administrator-oriented portal with GPU support and automation of AI processes. This reduces the complexity and the learning curve for users adopting TensorFlow, Caffe and a variety of other deep learning tools. The user doesn't need to spend time on system maintenance, adjustment and deployment scheduling and can instead focus on AI / ML tasks.

To learn more about building a private cloud with AI / ML capabilities, please visit GIGABYTE’s booth during COMPUTEX 2018 to talk to a GIGABYTE or ITRI professional, or contact either company directly.

Date & Time:

Tuesday 5th June to Friday 8th June 9.30am ~ 6.00pm, Saturday 9th June 9.30am ~ 4.00pm

Location:

Taipei World Trade Center Hall 1, Booth D0002
