Leadership Performance, Cost Efficient, Fully Open-Source

AMD Instinct™ MI350 Series

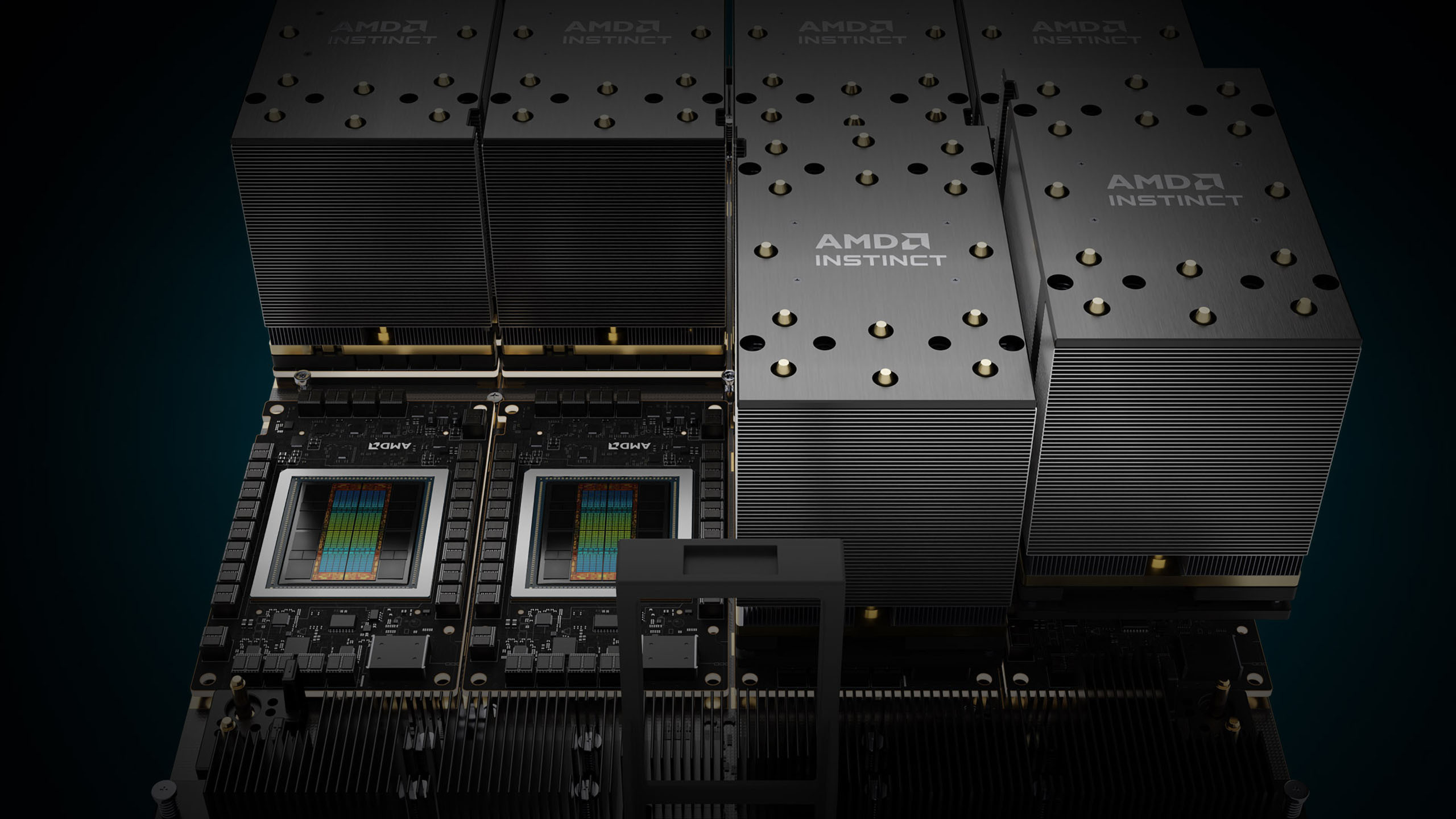

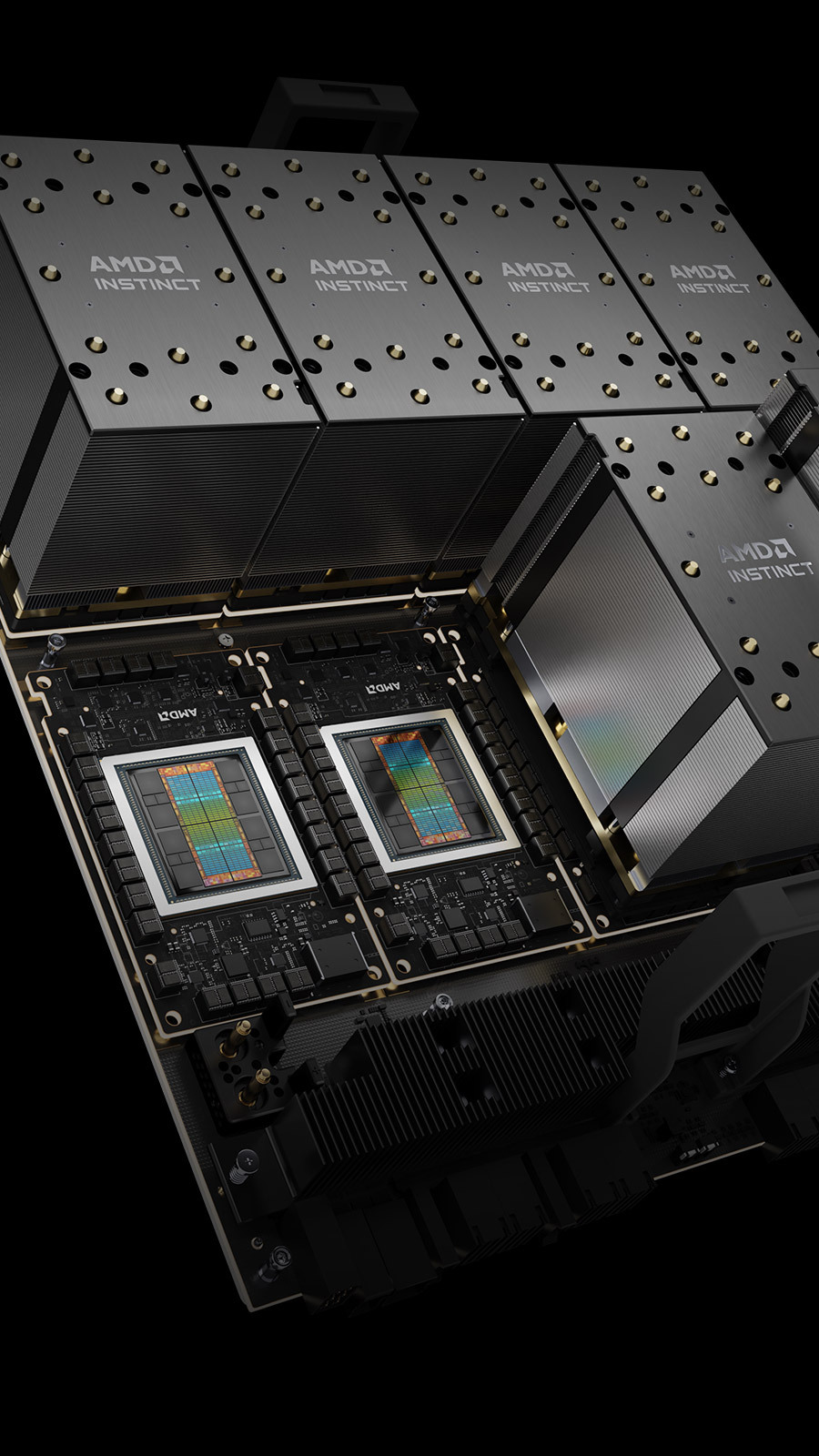

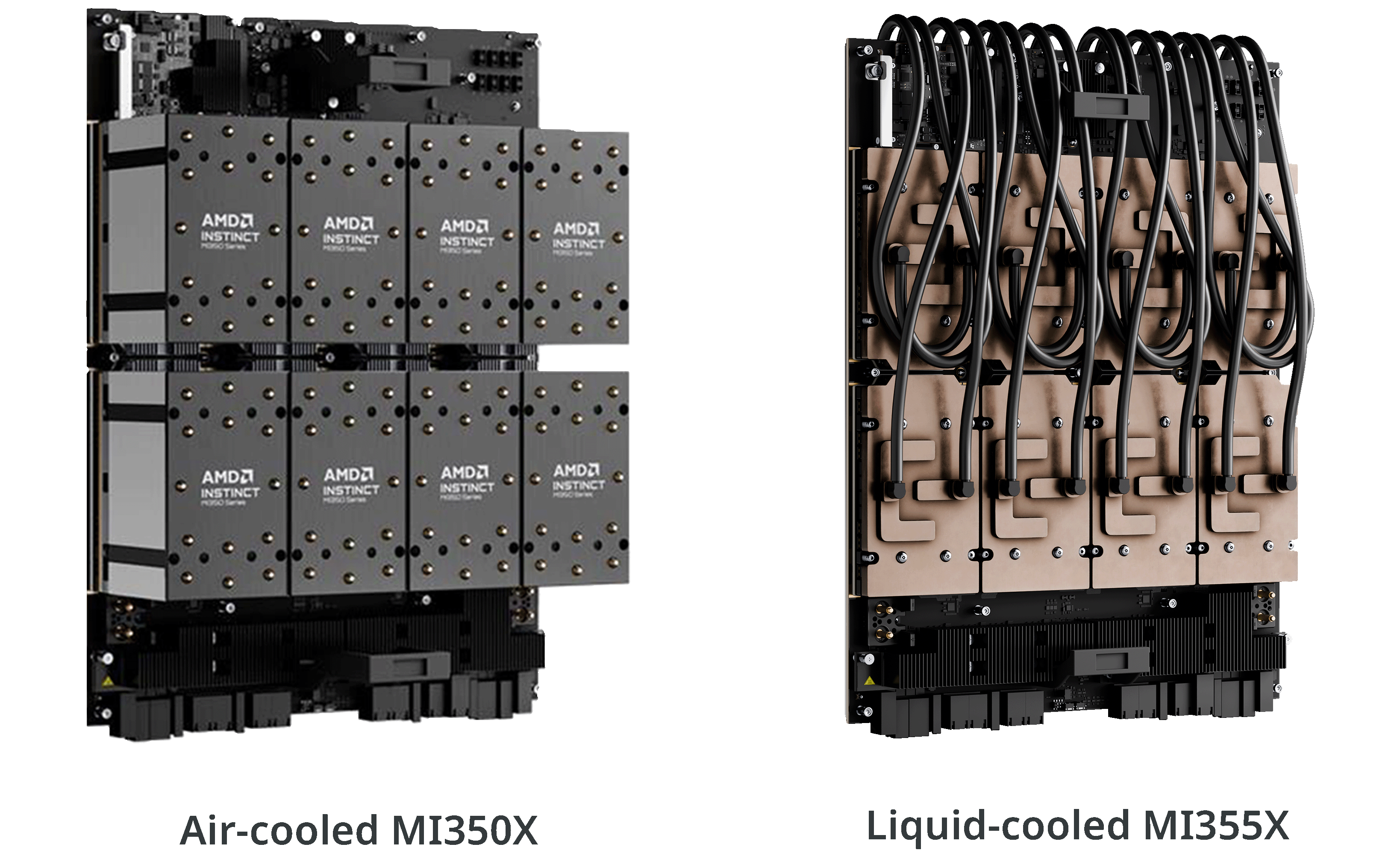

The AMD Instinct™ MI350 Series GPUs, launched in June 2025, represent a significant leap forward in data center computing, designed to accelerate generative AI and high-performance computing (HPC) workloads. Built on the 4th Gen AMD CDNA™ architecture and fabricated using TSMC's 3nm process, these GPUs deliver exceptional performance and energy efficiency for training massive AI models, high-speed inference, and complex HPC tasks like scientific simulations and data processing. Featuring 288 GB of HBM3E memory and up to 8 TB/s of memory bandwidth, the MI350X and MI355X GPUs offer up to a 4x generational improvement in AI compute and up to a 35x improvement in inference performance, positioning them as formidable competitors in the AI and HPC markets.

| AMD Instinct Model | MI355X GPU | MI350X GPU | MI325X GPU |
|---|---|---|---|
| Process Technology (XCD / IOD) | TSMC N3P / TSMC N6 | TSMC N3P / TSMC N6 | TSMC N5 / TSMC N6 |
| GPU Architecture | AMD CDNA4 | AMD CDNA4 | AMD CDNA3 |
| GPU Compute Units | 256 | 256 | 304 |
| Stream Processors | 16,384 | 16,384 | 19,456 |
| Transistor Count | 185 Billion | 185 Billion | 153 Billion |
| MXFP4 / MXFP6 | 10.1 PFLOPS | 9.2 PFLOPS | N/A |
| INT8 / INT8 (Sparsity) | 5.0 / 10.1 POPS | 4.6 / 9.2 POPS | 2.6 / 5.2 POPS |
| FP64 (Vector) | 78.6 TFLOPS | 72.1 TFLOPS | 81.7 TFLOPS |
| FP8 / OCP-FP8 (Sparsity) | 5.0 / 10.1 PFLOPS | 4.6 / 9.2 PFLOPS | 2.6 / 5.2 PFLOPS |
| BF16 / BF16 (Sparsity) | 2.5 / 5.0 PFLOPS | 2.3 / 4.6 PFLOPS | 1.3 / 2.6 PFLOPS |
| Dedicated Memory Size | 288 GB HBM3E | 288 GB HBM3E | 256 GB HBM3E |
| Memory Bandwidth | 8 TB/s | 8 TB/s | 6 TB/s |
| Bus Interface | PCIe Gen5 x16 | PCIe Gen5 x16 | PCIe Gen5 x16 |
| Cooling | Passive & Liquid | Passive | Passive & Liquid |
| Maximum TDP/TBP | 1400W | 1000W | 1000W |
| Virtualization Support | Up to 8 partitions | Up to 8 partitions | Up to 8 partitions |
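To put the specification figures in perspective, the generational ratios can be worked out directly from the table. The short Python sketch below does that arithmetic. The dictionary keys and helper names (`SPECS`, `ratio`, `fp8_tflops_per_watt`) are illustrative only, and dividing peak dense FP8 throughput by maximum TDP/TBP is an assumed, rough proxy for efficiency, not a measured result.

```python
# Peak (theoretical) figures copied from the specification table.
# Key names are illustrative; FP8 and BF16 values are the dense (non-sparsity) numbers.
SPECS = {
    "MI355X": {"fp8_pflops": 5.0, "bf16_pflops": 2.5, "fp64_tflops": 78.6,
               "mem_gb": 288, "bw_tbs": 8.0, "tbp_w": 1400},
    "MI350X": {"fp8_pflops": 4.6, "bf16_pflops": 2.3, "fp64_tflops": 72.1,
               "mem_gb": 288, "bw_tbs": 8.0, "tbp_w": 1000},
    "MI325X": {"fp8_pflops": 2.6, "bf16_pflops": 1.3, "fp64_tflops": 81.7,
               "mem_gb": 256, "bw_tbs": 6.0, "tbp_w": 1000},
}


def ratio(metric: str, new: str, old: str = "MI325X") -> float:
    """Return how many times larger `metric` is on `new` than on `old`."""
    return SPECS[new][metric] / SPECS[old][metric]


def fp8_tflops_per_watt(model: str) -> float:
    """Peak dense FP8 TFLOPS divided by maximum TDP/TBP (an assumed proxy)."""
    spec = SPECS[model]
    return spec["fp8_pflops"] * 1000 / spec["tbp_w"]


if __name__ == "__main__":
    for model in ("MI355X", "MI350X"):
        print(
            f"{model} vs MI325X: "
            f"FP8 x{ratio('fp8_pflops', model):.2f}, "
            f"FP64 x{ratio('fp64_tflops', model):.2f}, "
            f"memory x{ratio('mem_gb', model):.3f}, "
            f"bandwidth x{ratio('bw_tbs', model):.2f}, "
            f"FP8 TFLOPS/W {fp8_tflops_per_watt(model):.2f}"
        )
```

By these peak figures, the MI350 Series roughly doubles dense FP8 and BF16 throughput over the MI325X, while the listed FP64 vector rate is slightly lower, so the benefit depends on which precision a workload uses.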

AMD Instinct™ MI300 Series


Accelerators for the Exascale Era

- Designed for the most demanding workloads, the AMD Instinct MI325X GPU delivers 256GB of memory and 6 TB/s bandwidth, combining exceptional performance with enhanced power efficiency and support for matrix sparsity to optimize AI training and inference.

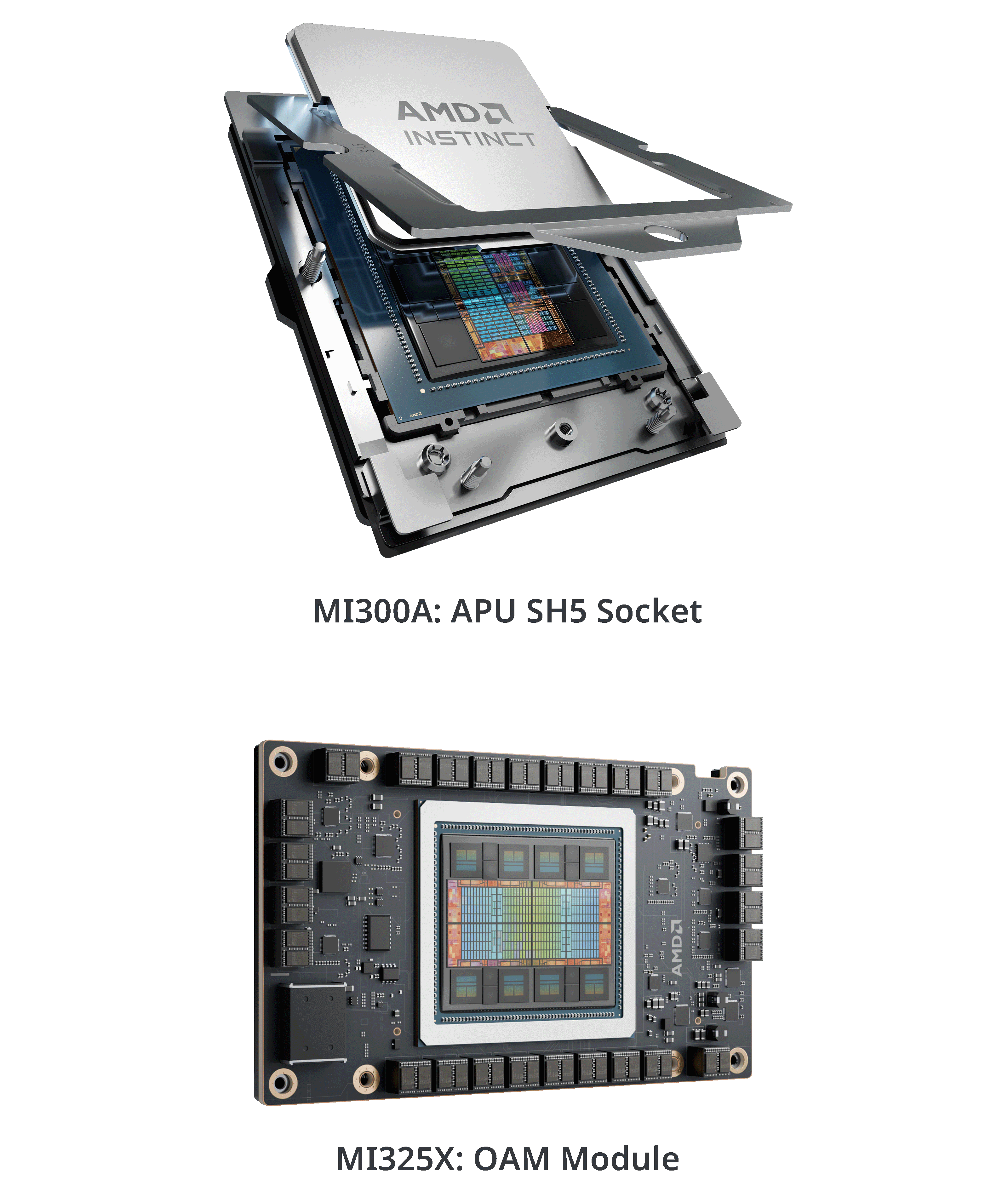

- The world's first unified data center APU, AMD Instinct MI300A, breaks through performance bottlenecks between CPU and GPU, eliminating programming overhead and simplifying data management.

- Powered by AMD EPYC™ processors and AMD Instinct™ GPUs and APUs, the world’s fastest supercomputers, El Capitan and Frontier, demonstrate outstanding performance and energy efficiency on both the TOP500 and GREEN500 lists, proving AMD's leadership in HPC and AI acceleration.

GIGABYTE delivers advanced servers built for the Exascale era, featuring the AMD Instinct™ MI325X and MI300X GPUs as Open Accelerator Modules (OAMs) on a universal baseboard (UBB) inside GIGABYTE G-series servers. The AMD Instinct™ MI300A APU, which integrates CPU and GPU into a single package, is available in the GIGABYTE G383 series with a four-LGA-socket configuration. Together, these systems provide exceptional compute density, scalability, and cooling efficiency, empowering enterprises and research institutions to drive innovation in AI and HPC with confidence.

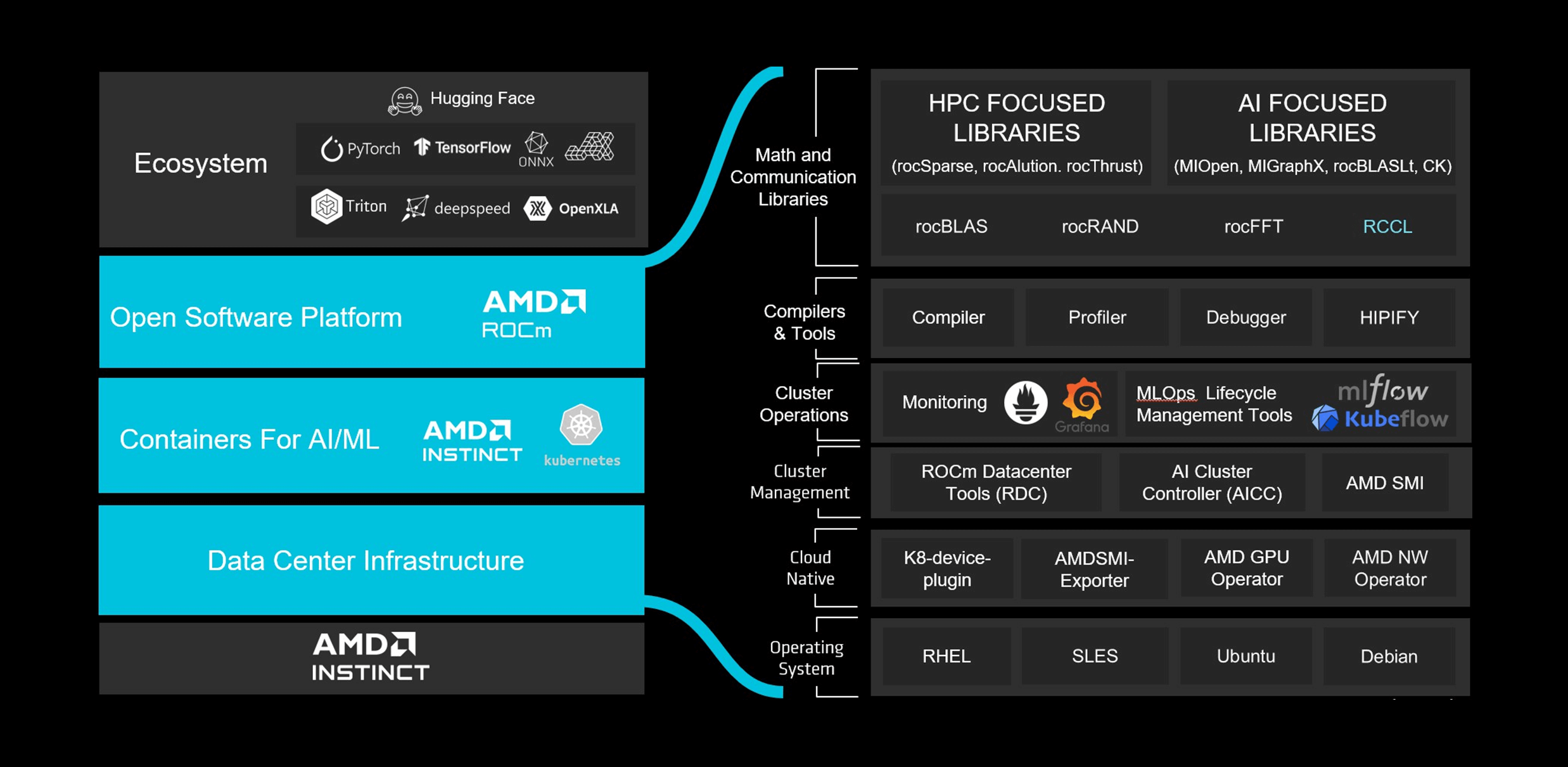

Optimize Next Gen Innovation with AMD ROCm™ 7.0

The AMD ROCm™ 7.0 software stack is a key differentiator that enables high-performance AI and HPC development with minimal code changes. AMD Instinct™ MI350 Series GPUs are fully optimized for leading frameworks such as PyTorch, TensorFlow, JAX, ONNX Runtime, Triton, and vLLM, and offer Day 0 support for popular models through automatic kernel generation and continuous validation.

[1] (MI300-080): Testing by AMD as of May 15, 2025, measuring the inference performance in tokens per second (TPS) of AMD ROCm 6.x software, vLLM 0.3.3 vs. AMD ROCm 7.0 preview version SW, vLLM 0.8.5 on a system with (8) AMD Instinct MI300X GPUs running Llama 3.1-70B (TP2), Qwen 72B (TP2), and Deepseek-R1 (FP16) models with batch sizes of 1-256 and sequence lengths of 128-204. Stated performance uplift is expressed as the average TPS over the (3) LLMs tested. Results may vary.
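As one illustration of what "minimal code changes" can mean in practice, ROCm builds of PyTorch reuse the familiar `torch.cuda` namespace, so device-discovery code written for other GPUs typically runs unchanged. The sketch below is a minimal example under that assumption. The helper name `describe_accelerator` is hypothetical, and the function falls back gracefully when PyTorch or a GPU is not present.

```python
def describe_accelerator() -> str:
    """Return a one-line description of the accelerator PyTorch can see."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # torch.version.hip is a version string on ROCm builds and None otherwise.
    hip_version = getattr(torch.version, "hip", None)
    backend = f"ROCm/HIP {hip_version}" if hip_version else "non-ROCm build"

    # On ROCm builds the torch.cuda API is backed by HIP, so the same calls apply.
    if not torch.cuda.is_available():
        return f"{backend}: no GPU visible"
    return f"{backend}: {torch.cuda.get_device_name(0)}"


if __name__ == "__main__":
    print(describe_accelerator())
```

The reported device name and HIP version depend on the installed PyTorch build and driver stack, so the output will differ from system to system.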

Select GIGABYTE for the AMD Instinct™ Platform

Compute Density

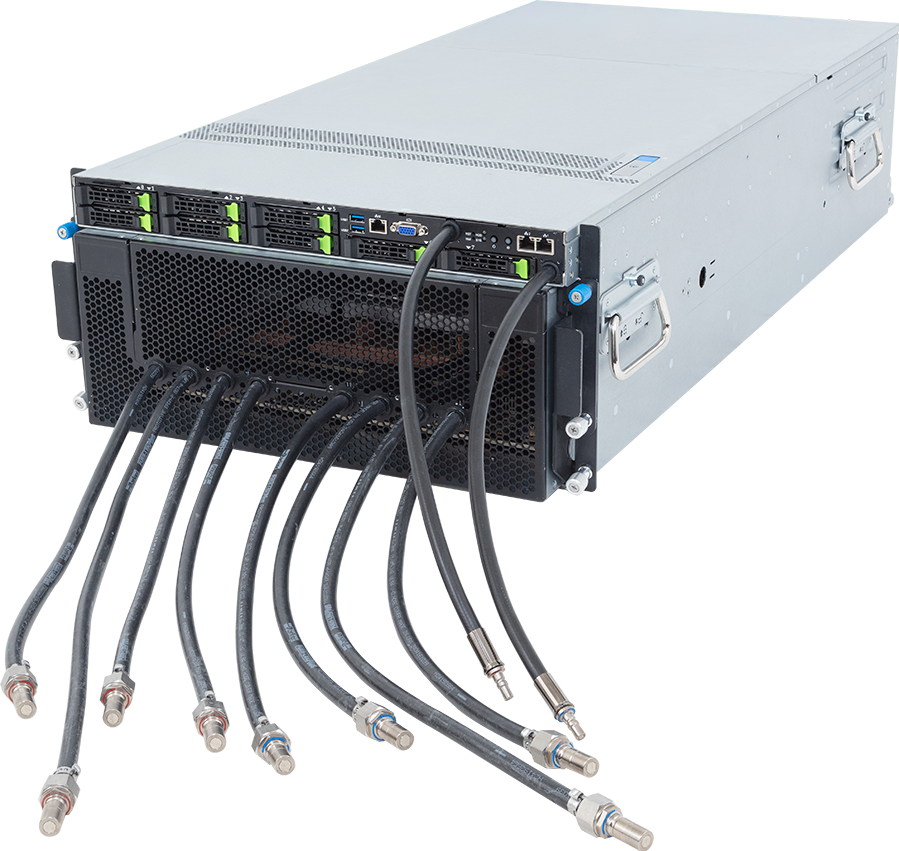

GIGABYTE offers industry-leading compute density in the 8U air-cooled G893 series and the 4U liquid-cooled G4L3 series, delivering greater performance per rack.

High Performance

The custom 8-GPU UBB-based servers ensure stable, peak performance from both CPUs and GPUs, with priority given to signal integrity and cooling.

Scale-out

Multiple expansion slots are available to be populated with Ethernet or InfiniBand NICs for high-speed communication between connected nodes.

Advanced Cooling

Server models with direct liquid cooling (DLC) allow CPUs and GPUs to dissipate heat faster and more efficiently than air cooling does.

Energy Efficiency

Real-time power management, automatic fan speed control, redundant Titanium PSUs, and the DLC option ensure optimal cooling and power efficiency.