Accelerators are Here to Stay

What shifts are taking place in data centers?

- A dedicated, powerful, parallel-processing accelerator is a necessity
- Exascale computing is a reality due to scalability of systems
- An open software platform leads the way into exascale and supercomputing systems with AMD ROCm 5.0
- Data centers have a need for GPU PCIe cards and more powerful OAM form factor GPUs

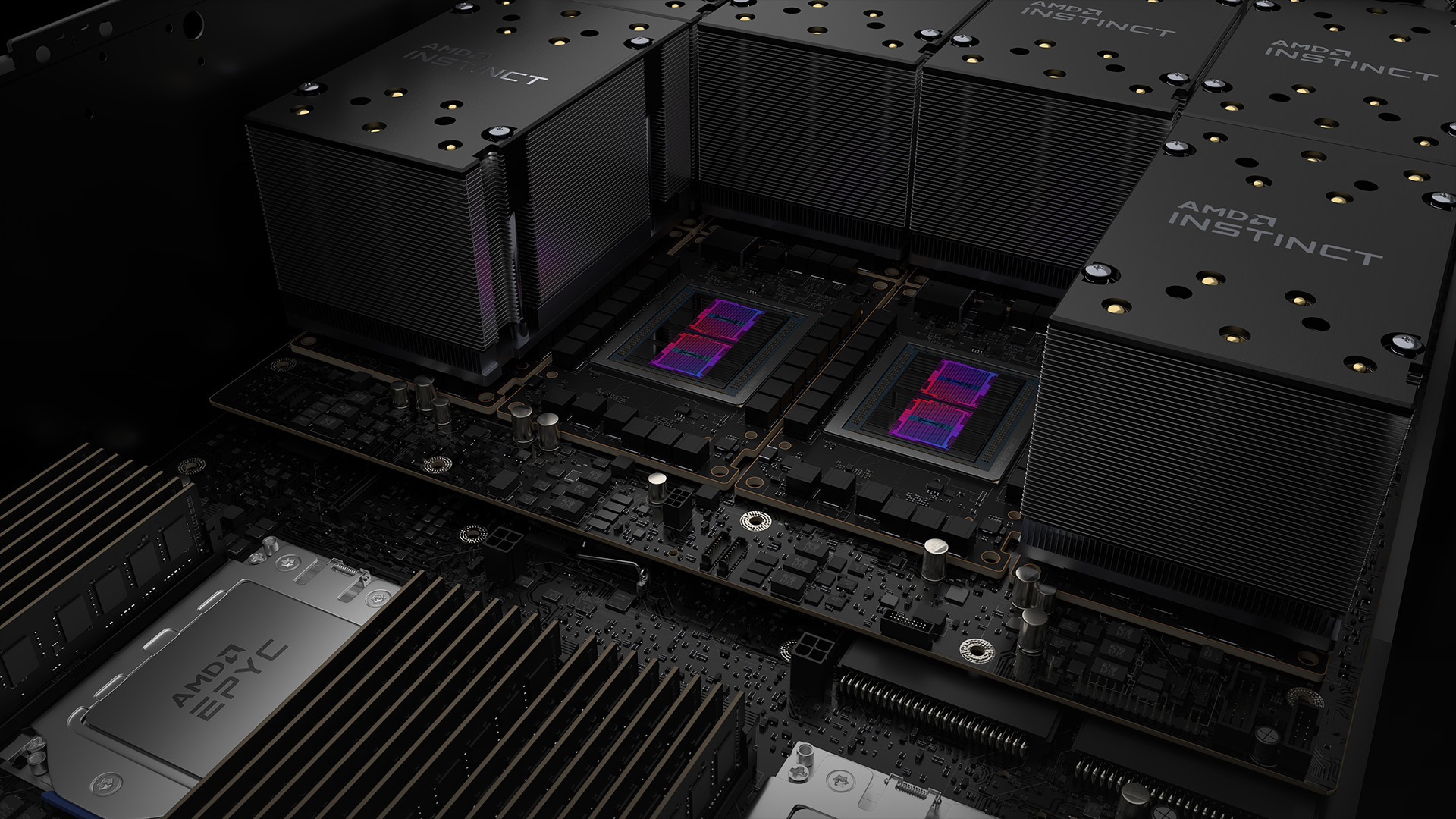

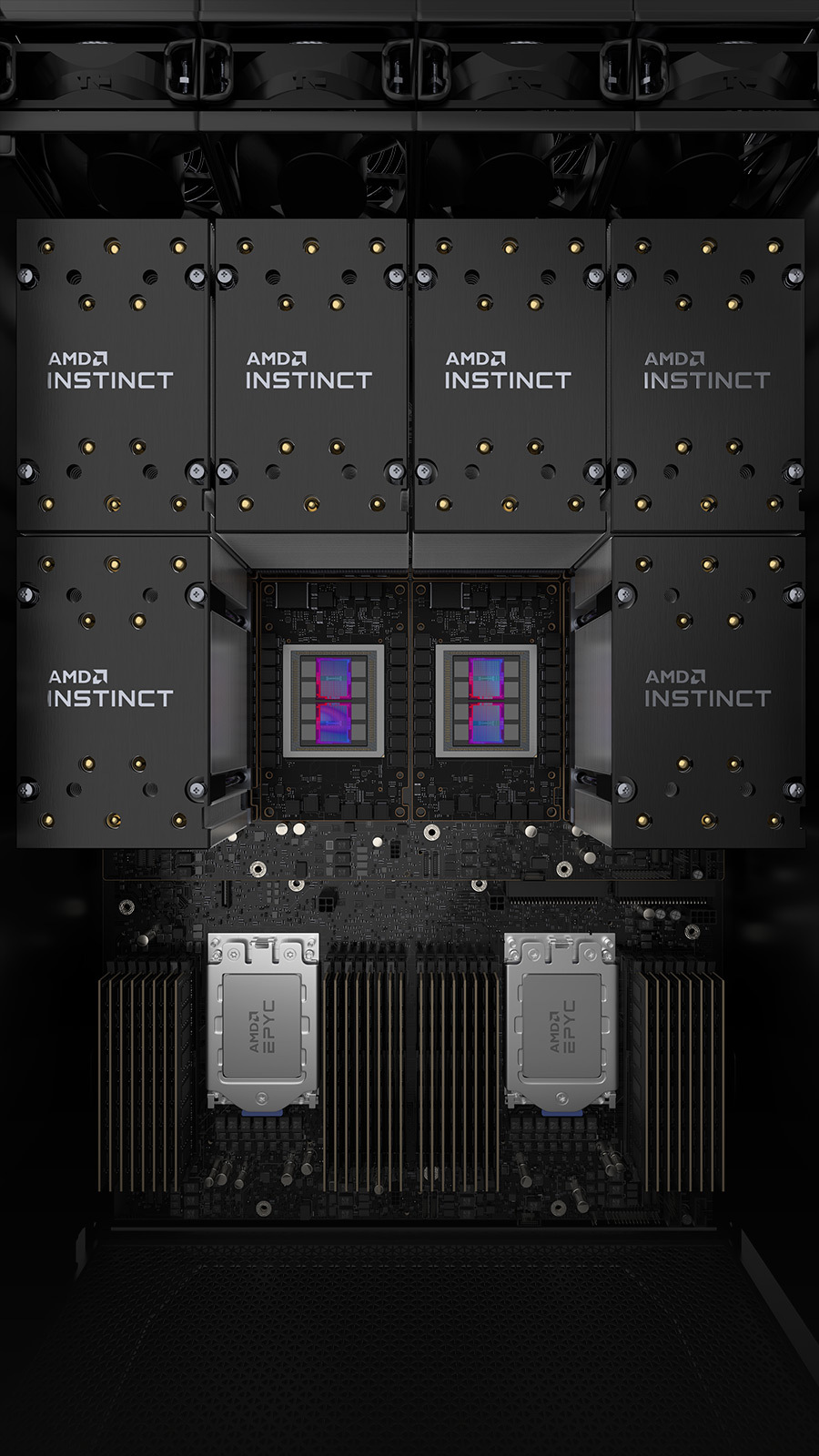

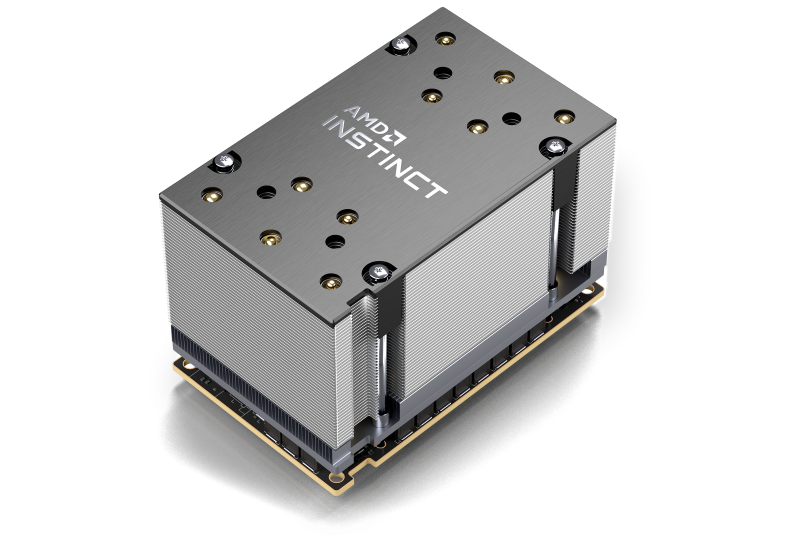

GIGABYTE has created and tailored passively cooled servers for the AMD Instinct™ MI250 (OAM) while expanding support and testing for servers with the MI210 (PCIe) offering. The new AMD Instinct™ MI200 series accelerators are designed for dense computing in GIGABYTE 2U servers. As a result, the technology can scale up to large computing clusters and also support smaller deployments such as a single HPC server.

Fields that Benefit from High Parallel Processing

AMD Instinct - Innovative Technology

AMD Innovations Pave the Way

AMD innovations in architecture, packaging and integration are pushing the boundaries of computing by unifying the most important processors in the data center, the CPU and the GPU accelerator. With industry-first multi-chip GPU modules along with 3rd Gen AMD Infinity Architecture, AMD is delivering performance, efficiency and overall system throughput for HPC and AI using AMD EPYC™ CPUs and AMD Instinct™ MI200 series accelerators.

- AMD Instinct™ MI200 series accelerators, powered by 2nd Gen AMD CDNA™ architecture, are built on an innovative multi-chip design to maximize throughput and power efficiency for the most demanding HPC and AI workloads.

- With AMD CDNA™ 2, the MI250 has new Matrix Cores delivering up to 7.8X the peak theoretical FP64 performance of previous-generation AMD GPUs, and offers the industry's best aggregate peak theoretical memory bandwidth at 3.2 terabytes per second.

- 3rd Gen AMD Infinity Fabric™ technology enables direct CPU-to-GPU connectivity, extending cache coherency and allowing a quick and simple on-ramp for CPU codes to tap the power of accelerators.

- AMD Instinct MI250 accelerators with advanced GPU peer-to-peer I/O connectivity through eight AMD Infinity Fabric™ links deliver up to 800 GB/s of total aggregate theoretical bandwidth.

- The Frontier supercomputer, one of the first exascale supercomputers, is the first to offer a unified compute architecture powered by AMD Infinity Platform™ based nodes.
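The Infinity Fabric bullet in the list above quotes an aggregate figure only. As a back-of-the-envelope sketch, assuming the 800 GB/s total is split evenly across the eight links (an assumption; the brochure does not state a per-link rate), the implied per-link bandwidth can be worked out as follows. The function name is illustrative, not part of any AMD tool.

```python
# Figures quoted in the Infinity Fabric bullet above.
AGGREGATE_GBPS = 800  # total aggregate theoretical bandwidth, GB/s
LINK_COUNT = 8        # number of Infinity Fabric links


def per_link_bandwidth(aggregate_gbps: float, links: int) -> float:
    """Return the per-link bandwidth in GB/s, assuming an even split."""
    if links <= 0:
        raise ValueError("links must be a positive integer")
    return aggregate_gbps / links


if __name__ == "__main__":
    rate = per_link_bandwidth(AGGREGATE_GBPS, LINK_COUNT)
    print(f"Implied per-link bandwidth: {rate:.0f} GB/s")
```

These are peak theoretical numbers; sustained peer-to-peer throughput depends on topology and workload.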

Ecosystem without Borders

AMD ROCm™ is an open software platform allowing researchers to tap the power of AMD Instinct™ accelerators to drive scientific discoveries. The ROCm platform is built on the foundation of open portability, supporting environments across multiple accelerator vendors and architectures. With ROCm 5, AMD extends its open platform powering top HPC and AI applications with AMD Instinct™ MI200 Series accelerators, increasing accessibility of ROCm for developers and delivering leadership performance across key workloads.

About ROCm 5:

- ROCm™ - Open software platform for science used worldwide on leading exascale and supercomputing systems

- ROCm™ 5 extends the AMD open platform for HPC and AI with optimized compilers, libraries and runtime support for MI200

- Open & Portable: the ROCm™ open ecosystem supports heterogeneous environments with multiple GPU vendors and architectures

- ROCm™ 5 library optimizations using new MI200 features: FP64 Matrix ops, reduced kernel launch overhead, Packed FP32 math and FP64 atomics support

- ROCm™ upstream support of key industry frameworks: TensorFlow, PyTorch and ONNX-RT

- AMD Infinity Hub: ready-to-deploy software containers and guides for HPC, AI and machine learning, giving researchers, data scientists and end users a quick and easy way to find, download and install optimized containers for HPC apps and machine learning frameworks supported on AMD Instinct™ MI200 series accelerators and ROCm™.
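As an illustration of the framework support listed above, the following minimal sketch checks whether a PyTorch installation is a ROCm build and whether it can see an accelerator. It assumes a ROCm-enabled PyTorch build is installed; ROCm builds of PyTorch expose devices through the existing `torch.cuda` namespace and report a HIP version in `torch.version.hip`. The helper name `describe_accelerator` is hypothetical, and the script falls back gracefully when PyTorch is absent.

```python
"""Report whether a ROCm-enabled PyTorch build can see an accelerator."""


def describe_accelerator() -> dict:
    """Return a small summary dict; works even if PyTorch is not installed."""
    info = {"torch_installed": False, "hip_version": None, "devices": []}
    try:
        import torch  # ROCm builds of PyTorch reuse the torch.cuda namespace
    except ImportError:
        return info

    info["torch_installed"] = True
    # torch.version.hip is a version string on ROCm builds, None otherwise.
    info["hip_version"] = getattr(torch.version, "hip", None)
    if torch.cuda.is_available():
        info["devices"] = [
            torch.cuda.get_device_name(i)
            for i in range(torch.cuda.device_count())
        ]
    return info


if __name__ == "__main__":
    print(describe_accelerator())
```

On a system without a supported GPU or without PyTorch, the `devices` list is simply empty, so the same script can be used to sanity-check a container before launching a job.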

Benefits of the MI200 Series Accelerators

- High-performance
- Scalability
- Connections
- Leadership
- All AI Workloads

AMD Instinct™ MI250 Accelerator

| Model | MI250 OAM |
|---|---|
| Compute Units | 208 CU |
| Stream Processors | 13,312 |
| Peak FP64/FP32 Matrix | 90.5 TFLOPS |
| Peak FP64/FP32 Vector | 45.3 TFLOPS |
| Peak FP16/BF16 | 362.1 TFLOPS |
| Memory Size | 128 GB HBM2e |
| Memory Clock | 1.6 GHz |
| Memory Bandwidth | Up to 3.2 TB/s |
| Bus Interface | PCIe Gen4 |
| Infinity Fabric Links | Up to 6 |
| Max Power | 560 W TDP |

AMD Instinct™ MI210 Accelerator

| Model | MI210 PCIe |
|---|---|
| Compute Units | 104 CU |
| Stream Processors | 6,656 |
| Peak FP64/FP32 Matrix | 45.3 TFLOPS |
| Peak FP64/FP32 Vector | 22.6 TFLOPS |
| Peak FP16/BF16 | 181.0 TFLOPS |
| Memory Size | 64 GB HBM2e |
| Memory Clock | 1.6 GHz |
| Memory Bandwidth | Up to 1.6 TB/s |
| Bus Interface | PCIe Gen4 |
| Infinity Fabric Links | Up to 3 |
| Max Power | 300 W TDP |
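The figures in the two specification tables can be combined into a few derived ratios for a rough side-by-side comparison. The sketch below uses only values from the tables; the class and method names are illustrative. Note the assumptions: every input is a peak theoretical figure, and the efficiency ratio divides by TDP (a power ceiling), not measured power draw, so the results are upper-bound estimates, not benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    """Spec-sheet values copied from the tables above."""
    name: str
    compute_units: int
    stream_processors: int
    fp64_vector_tflops: float   # peak FP64/FP32 vector
    fp64_matrix_tflops: float   # peak FP64/FP32 matrix
    mem_bandwidth_tbs: float    # peak memory bandwidth, TB/s
    tdp_watts: int

    def stream_processors_per_cu(self) -> float:
        return self.stream_processors / self.compute_units

    def vector_gflops_per_watt(self) -> float:
        # Peak FP64 vector throughput per watt of TDP (not measured power).
        return self.fp64_vector_tflops * 1000 / self.tdp_watts

    def bytes_per_vector_flop(self) -> float:
        # Peak memory bytes available per peak FP64 vector FLOP.
        return self.mem_bandwidth_tbs / self.fp64_vector_tflops


MI250 = Accelerator("MI250 OAM", 208, 13_312, 45.3, 90.5, 3.2, 560)
MI210 = Accelerator("MI210 PCIe", 104, 6_656, 22.6, 45.3, 1.6, 300)

if __name__ == "__main__":
    for acc in (MI250, MI210):
        print(
            f"{acc.name}: "
            f"{acc.stream_processors_per_cu():.0f} SP/CU, "
            f"{acc.vector_gflops_per_watt():.1f} GFLOPS/W (FP64 vector), "
            f"{acc.bytes_per_vector_flop():.3f} B/FLOP"
        )
```

Both parts work out to the same number of stream processors per compute unit and nearly the same bytes-per-FLOP ratio, which is consistent with the MI210 carrying half the compute and memory resources of the MI250.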