
CPU vs. GPU: Which Processor Is Right for You?

Besides the central processing unit (CPU), the graphics processing unit (GPU) is also an important part of a high-performing server. Do you know how a GPU works and how it is different from a CPU? Do you know the best way to make them work together to deliver unrivalled processing power? GIGABYTE Technology, an industry leader in server solutions that support the most advanced processors, is pleased to present our latest Tech Guide. We will explain the differences between CPUs and GPUs; we will also introduce GIGABYTE products that will help you inject GPU computing into your server rooms and data centers.

The Definition of the CPU

The Origin of the GPU

What are the Key Differences between CPU and GPU?

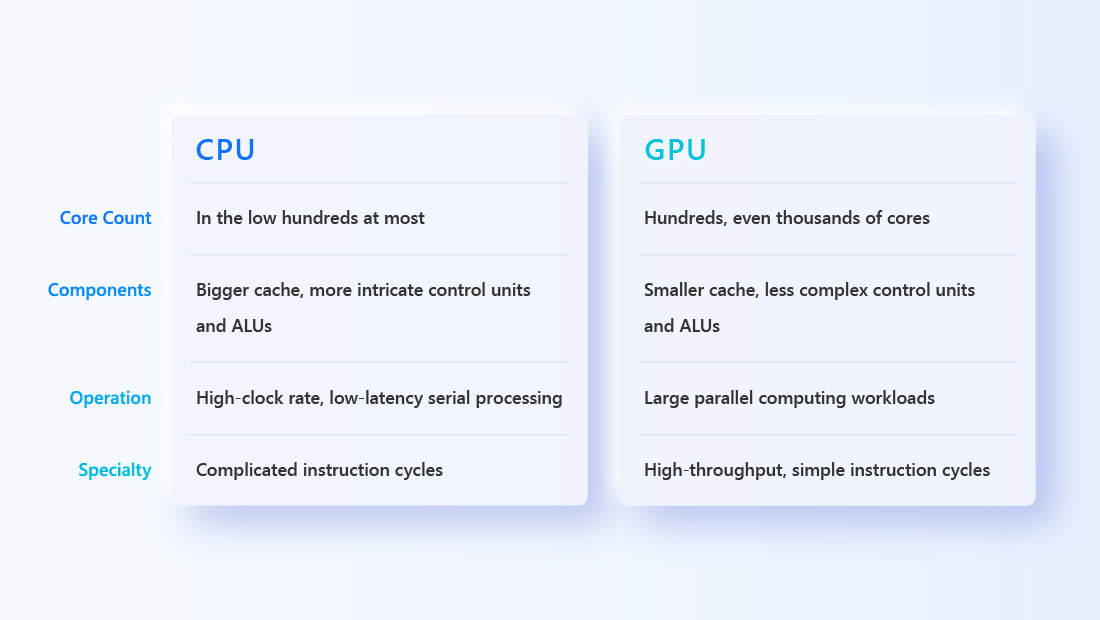

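The components that make up CPUs and GPUs are analogous: both comprise control units, arithmetic logic units (ALUs), caches, and DRAM. The main difference is that a GPU has smaller, simpler control units, ALUs, and caches, and a great many of them. So while a CPU is a general-purpose processor that can handle almost any task, including complex sequential logic, a GPU can complete certain specific tasks very quickly, especially those that apply the same operation to large amounts of data in parallel.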
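The contrast between a few complex cores and many simple ones also shapes how software is written. The Python sketch below is only a simulation of the CUDA-style programming model: the same small function (a "kernel") runs once per data element, and each instance computes its element from its block and thread index. The names `launch_kernel`, `axpy_kernel`, and the block size are hypothetical choices for illustration; on a real GPU the instances run in parallel across many cores, whereas here they run one after another.

```python
from typing import Callable, List


def cpu_axpy(a: float, x: List[float], y: List[float]) -> List[float]:
    """CPU style: a single thread walks the whole array in a loop."""
    out = []
    for xi, yi in zip(x, y):
        out.append(a * xi + yi)
    return out


def axpy_kernel(block_idx: int, block_dim: int, thread_idx: int,
                a: float, x: List[float], y: List[float], out: List[float]) -> None:
    """GPU style: each kernel instance handles exactly one element."""
    i = block_idx * block_dim + thread_idx  # global element index, as in CUDA
    if i < len(x):  # guard: the last block may contain spare threads
        out[i] = a * x[i] + y[i]


def launch_kernel(kernel: Callable[..., None], grid_dim: int, block_dim: int, *args) -> None:
    """Hypothetical launcher: runs every (block, thread) instance sequentially.
    A GPU would execute these independent instances concurrently."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def gpu_style_axpy(a: float, x: List[float], y: List[float], block_dim: int = 4) -> List[float]:
    out = [0.0] * len(x)
    # Ceiling division: launch enough blocks to cover every element.
    grid_dim = (len(x) + block_dim - 1) // block_dim
    launch_kernel(axpy_kernel, grid_dim, block_dim, a, x, y, out)
    return out


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [10.0, 20.0, 30.0, 40.0, 50.0]
print(cpu_axpy(2.0, x, y))        # [12.0, 24.0, 36.0, 48.0, 60.0]
print(gpu_style_axpy(2.0, x, y))  # [12.0, 24.0, 36.0, 48.0, 60.0]
```

Both versions compute the same result; the difference is that the GPU-style version expresses the work as thousands of tiny, independent pieces that simple cores can process side by side. The `if i < len(x)` guard is needed because the grid is rounded up to whole blocks, so some instances have no element to process.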

The table above is a handy comparison of CPUs and GPUs. Through heterogeneous computing, each computing task can be allocated to the processor best suited to it: for example, serial, branch-heavy work to the CPU and massively parallel workloads to the GPU. This accelerates computing and helps you squeeze every drop of performance out of your server.
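As a rough illustration of how heterogeneous computing can route work, the sketch below estimates a task's run time on each processor with a simple Amdahl's-law-style model (the serial part runs on one core, the parallel part is split across all cores) plus a fixed start-up overhead for the GPU, then picks the faster processor. The `Processor` and `Task` types, the core counts, per-core speeds, and overhead are invented, illustrative numbers, not measurements of any real CPU or GPU, and real schedulers weigh many more factors.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Processor:
    name: str
    cores: int
    core_speed: float       # work units per second per core (illustrative)
    launch_overhead: float  # fixed seconds before work starts, e.g. data copies (illustrative)


@dataclass
class Task:
    name: str
    work: float               # total work units
    parallel_fraction: float  # share of the work that can run in parallel (0..1)


def estimated_time(task: Task, proc: Processor) -> float:
    """Amdahl-style estimate: the serial part runs on one core,
    the parallel part is split evenly across all cores."""
    serial = task.work * (1 - task.parallel_fraction) / proc.core_speed
    parallel = task.work * task.parallel_fraction / (proc.cores * proc.core_speed)
    return proc.launch_overhead + serial + parallel


def assign(tasks: List[Task], procs: List[Processor]) -> List[Tuple[str, str]]:
    """Route each task to whichever processor the model says finishes it first."""
    plan = []
    for t in tasks:
        best = min(procs, key=lambda p: estimated_time(t, p))
        plan.append((t.name, best.name))
    return plan


# Hypothetical hardware: a few fast cores vs. many slow cores.
cpu = Processor("CPU", cores=16, core_speed=4.0, launch_overhead=0.0)
gpu = Processor("GPU", cores=4096, core_speed=1.0, launch_overhead=0.5)

tasks = [
    Task("branch-heavy control logic", work=100.0, parallel_fraction=0.1),
    Task("matrix math on a large batch", work=100_000.0, parallel_fraction=0.999),
]
print(assign(tasks, [cpu, gpu]))
# [('branch-heavy control logic', 'CPU'), ('matrix math on a large batch', 'GPU')]
```

Under these assumed numbers, the small, mostly serial task stays on the CPU because the GPU's start-up overhead and slower individual cores outweigh its core count, while the large, highly parallel task finishes far sooner on the GPU. In practice a single application often splits its work the same way, keeping serial control code on the CPU and offloading the parallel kernels to the GPU.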

How to Use Both CPUs and GPUs to Achieve Maximum Performance?

How to Inject GPU Computing into Your Server Solution?

By injecting GPU computing into your server solutions, you will benefit from better overall performance. GIGABYTE Technology offers a variety of server products that are ideal platforms for advanced CPUs and GPUs. They are used for AI and big data applications in fields such as weather forecasting, energy exploration, and scientific research.

CERN: GPU Computing for Data Analysis

Waseda University: GPU Computing for Computer Simulations and Machine Learning

Cheng Kung University: GPU Computing for Artificial Intelligence
