A GPU-Accelerated Era

Breaking Barriers in Accelerated Computing and Generative AI

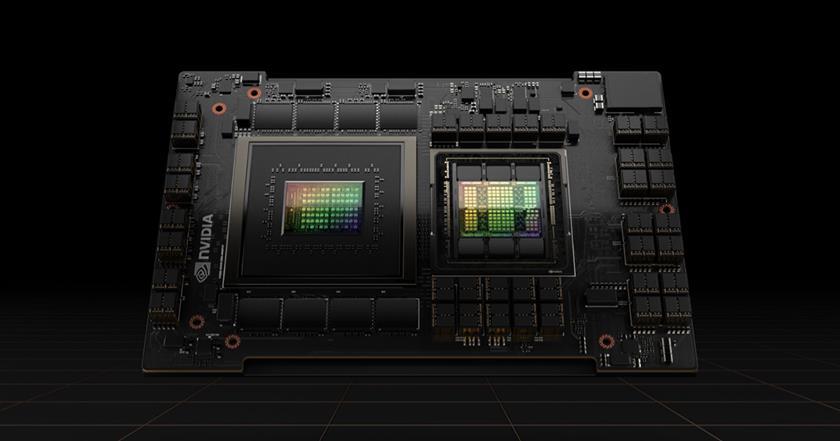

Blackwell-architecture GPUs

208 billion transistors with TSMC 4NP process

2nd Gen Transformer Engine

Doubles performance with FP4 support

5th Gen NVLink & NVLink Switch

1.8 TB/s GPU-GPU interconnect

RAS Engine

100% In-system self-test

Secure AI

Full-performance encryption and trusted execution environment (TEE)

Decompression Engine

800 GB/s

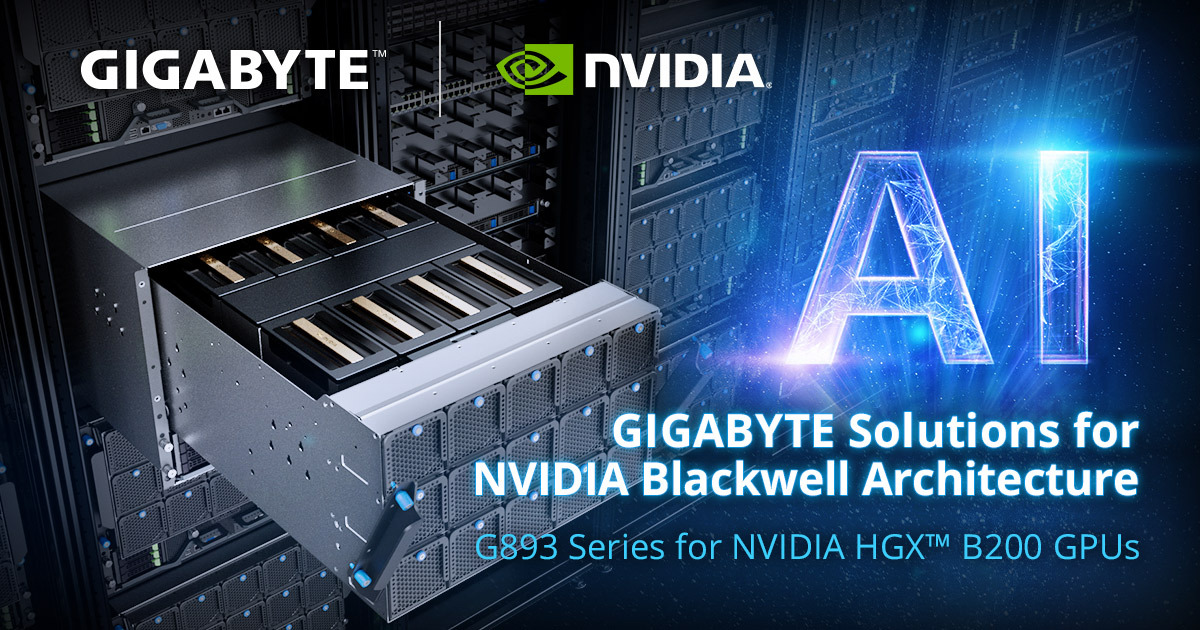

GIGABYTE's Commitment to Flexible and Scalable Solutions

Short TTM for Agile Deployment

Flexible Scalability for Diverse Scenarios

Comprehensive One-Stop Service for Optimized Configuration

GIGAPOD Deployment Process

NVIDIA GB300 NVL72: Built for the Age of AI Reasoning

The NVIDIA GB300 NVL72 features a fully liquid-cooled, rack-scale design that unifies 72 NVIDIA Blackwell Ultra GPUs and 36 Arm®-based NVIDIA Grace™ CPUs in a single platform optimized for test-time scaling inference.

AI factories built on the GB300 NVL72, using NVIDIA Quantum-X800 InfiniBand or Spectrum™-X Ethernet paired with NVIDIA ConnectX®-8 SuperNIC™, deliver 50x higher output for reasoning model inference than the NVIDIA Hopper™ platform.

| Metric | GB300 NVL72 vs. NVIDIA HGX H100 |
|---|---|
| Fast Memory | 60X |
| HBM Bandwidth | 20X |
| Networking Bandwidth | 18X |

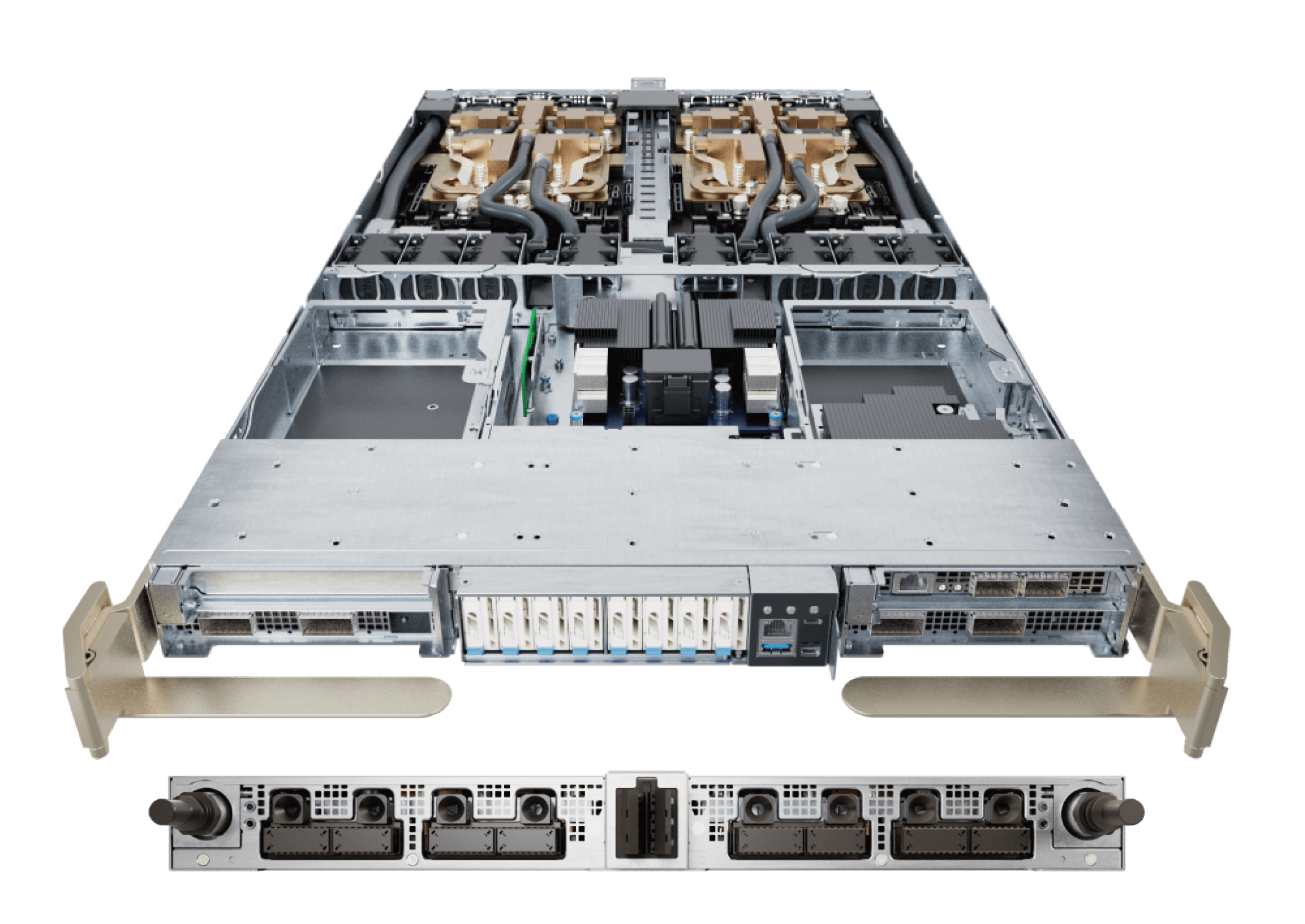

XN15-CB0-LA01 Compute Tray

NVIDIA HGX™ B300 / B200: Optimized for AI and High-Performance Computing

- Support for full-height add-in cards, accommodating DPUs and SuperNICs.

- A PCIe cage design and front-access motherboard/GPU trays for streamlined maintenance.

- Hot-swappable, fully redundant PSUs with multiple connector options for enhanced flexibility.

NVIDIA HGX™ B300

- 8 x NVIDIA Blackwell Ultra GPUs

- Up to 2.1 TB of GPU memory

- 105 petaFLOPS training performance

- 144 petaFLOPS inference performance

- 1.8 TB/s GPU-to-GPU bandwidth with NVIDIA NVLink™ and NVSwitch™

G894-SD3-AAX7

- NVIDIA HGX™ B300

- 8 x 800 Gb/s OSFP InfiniBand XDR or dual 400 Gb/s Ethernet GPU networking ports via onboard NVIDIA ConnectX®-8 SuperNIC™

- Compatible with NVIDIA® BlueField®-3 DPUs and ConnectX®-7 NICs

- Dual Intel® Xeon® 6700/6500-Series Processors

- 2 x 10Gb/s LAN ports

- 8 x 2.5" Gen5 NVMe hot-swap bays

- 4 x FHHL PCIe Gen5 x16 slots

- 12 x 3000W 80 PLUS Titanium redundant power supplies
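
For a rough sense of scale, the eight onboard GPU networking ports of the G894-SD3-AAX7 add up as follows. This is simple line-rate arithmetic that assumes all eight ports run at 800 Gb/s InfiniBand XDR; it ignores encoding and protocol overhead, so it is a theoretical upper bound, not a measured throughput figure.

```latex
8 \times 800\,\text{Gb/s} = 6400\,\text{Gb/s} = 6.4\,\text{Tb/s},
\qquad
\frac{6400\,\text{Gb/s}}{8\,\text{bits/byte}} = 800\,\text{GB/s}
```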

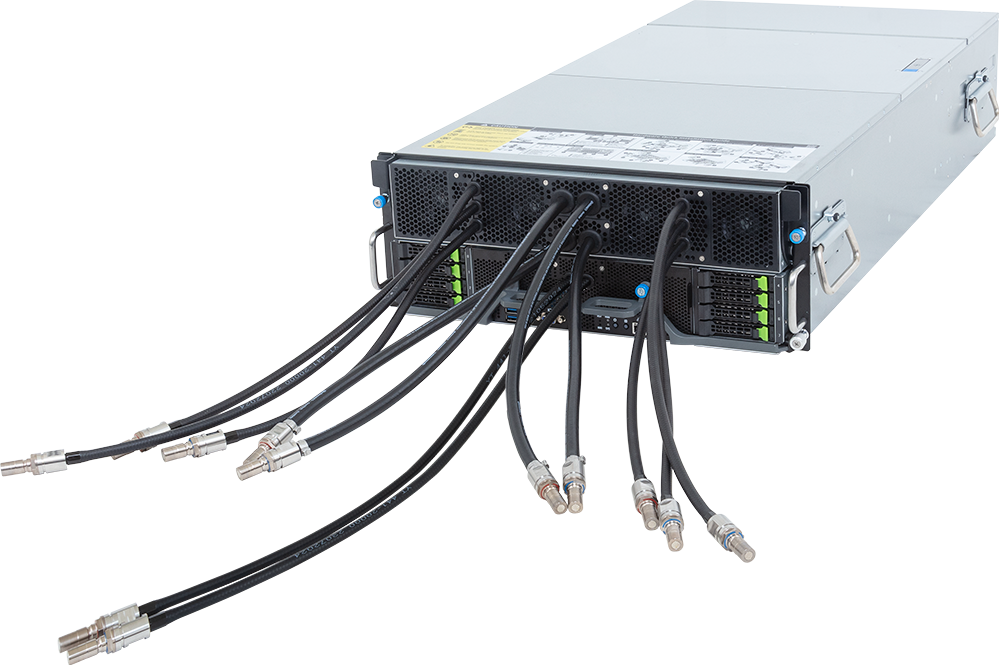

G4L3 4U HPC/AI Server

- Liquid-cooled NVIDIA HGX™ B200

- Dual 5th/4th Gen Intel® Xeon® Scalable or dual AMD EPYC™ 9005/9004 Series CPUs

- Compatible with NVIDIA® BlueField®-3 DPUs and ConnectX®-7 NICs

- 2 x 10Gb/s LAN ports

- 8 x 2.5" Gen5 NVMe hot-swap bays

- 12 x FHHL PCIe Gen5 x16 slots

- 4+4 3000W 80 PLUS Titanium redundant PSUs