All Data Can Be AI Value

An AI factory, a term for IT infrastructure engineered to produce inventive, practical AI applications from organizational data, is what propels industry leaders ahead of the competition. GIGABYTE, an end-to-end data center infrastructure and AI solution provider, can help enterprises of any size conceptualize and construct their AI factories. Our time-tested, world-renowned hardware and software portfolio can transform your data pipeline into a round-the-clock generator of smart value that enhances productivity and puts you on track for unprecedented AI success.


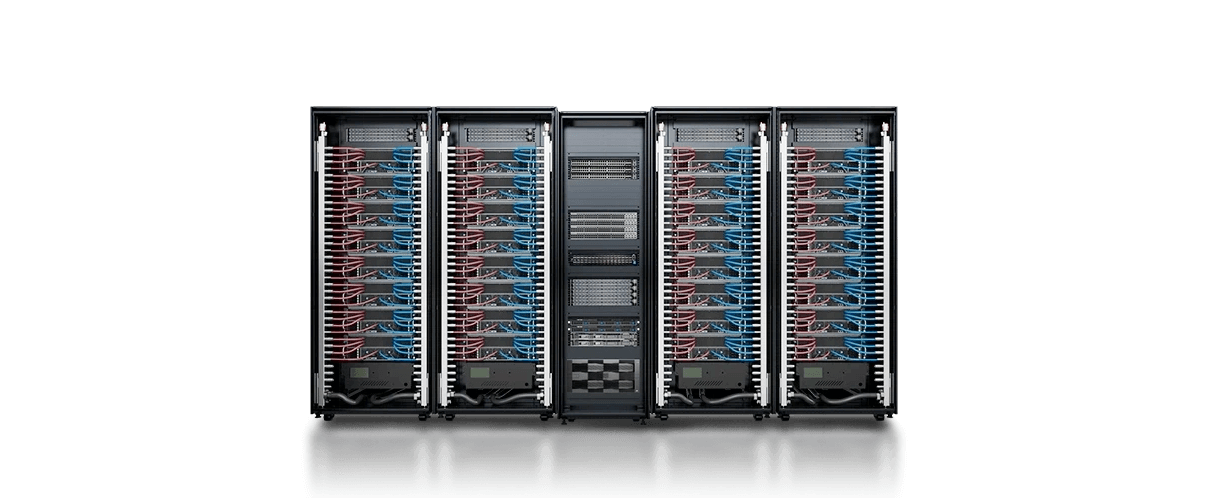

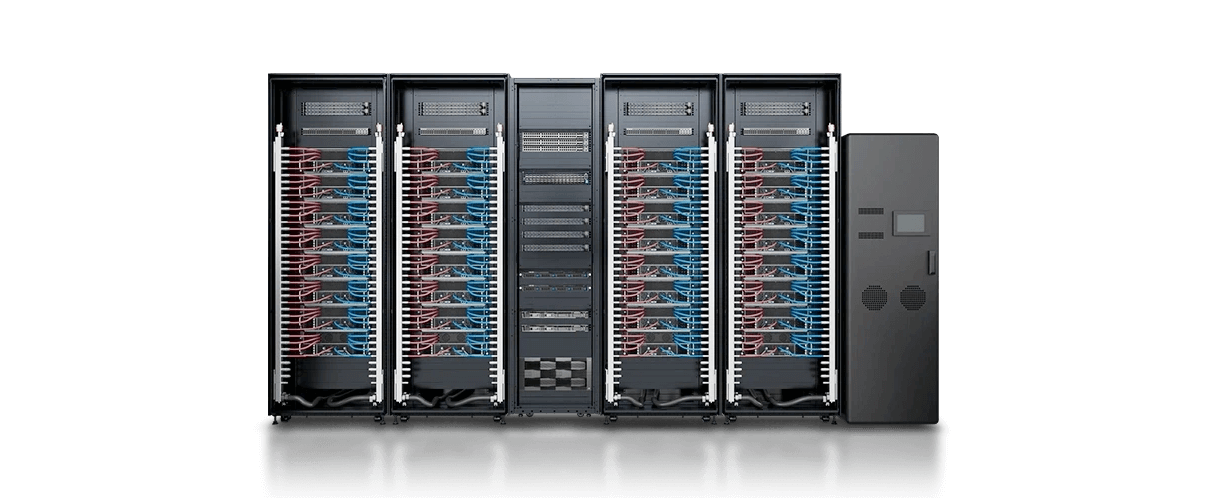

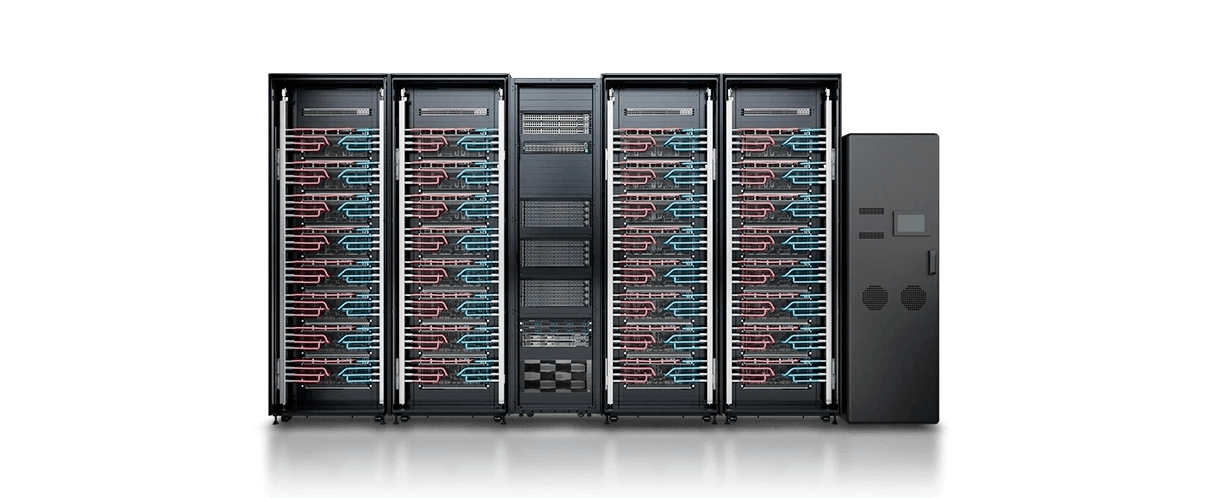

Develop Your AI Foundation with GIGAPOD

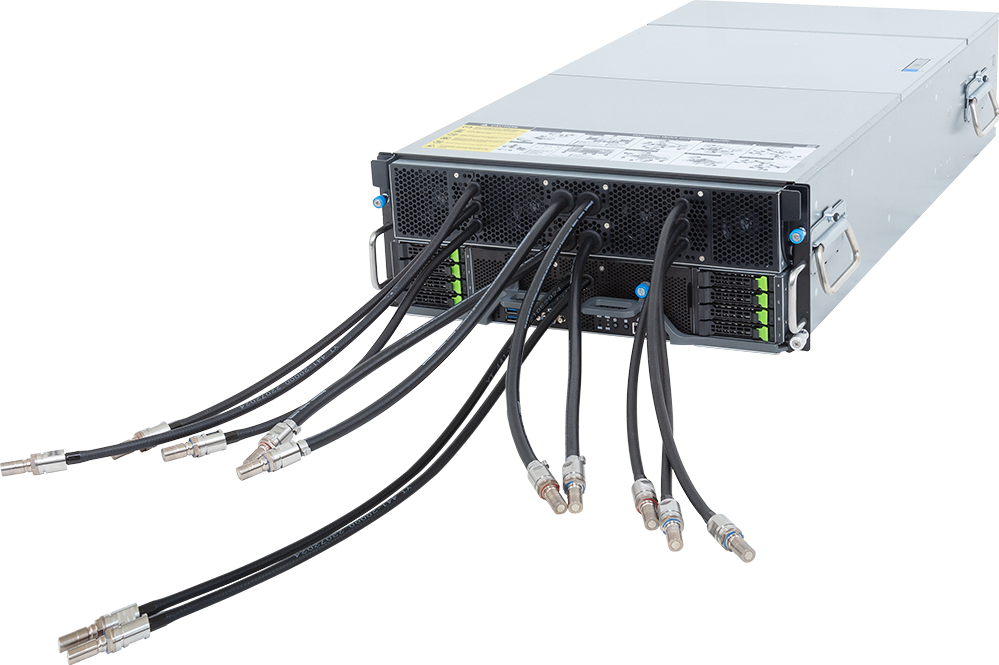

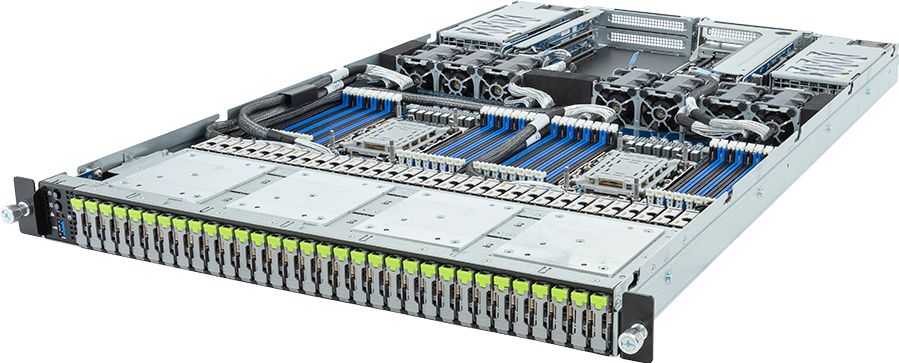

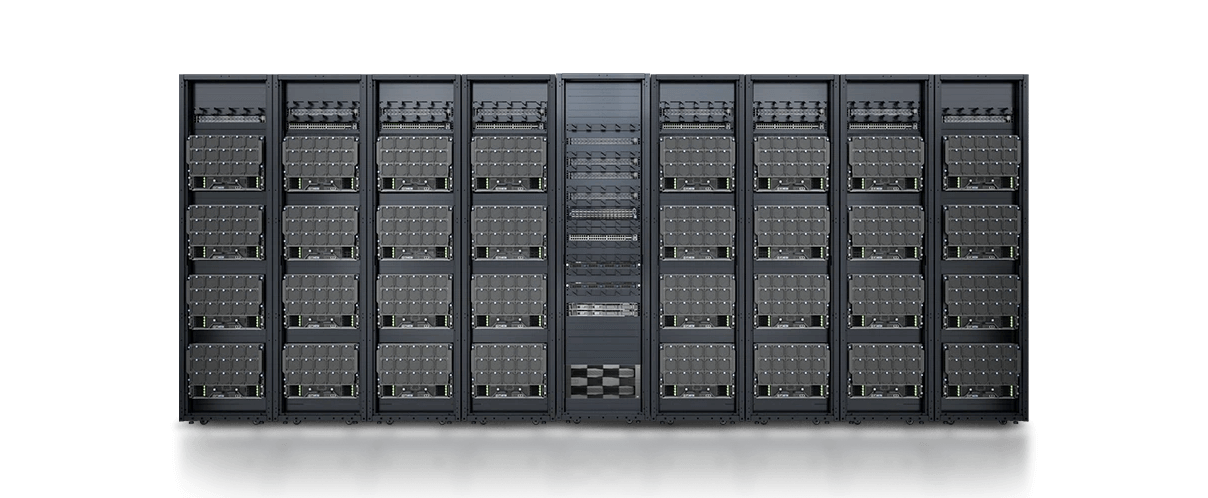

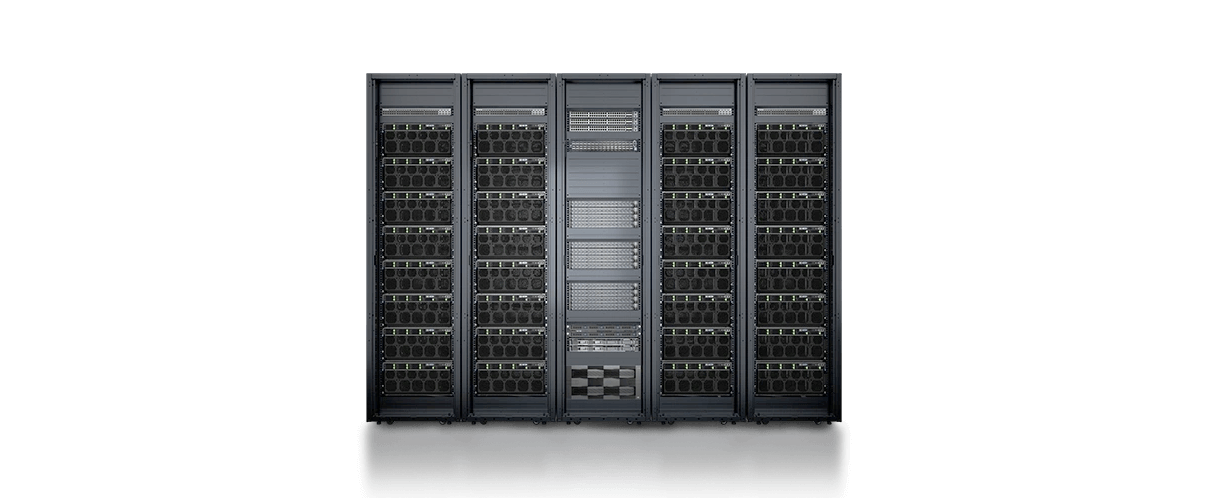

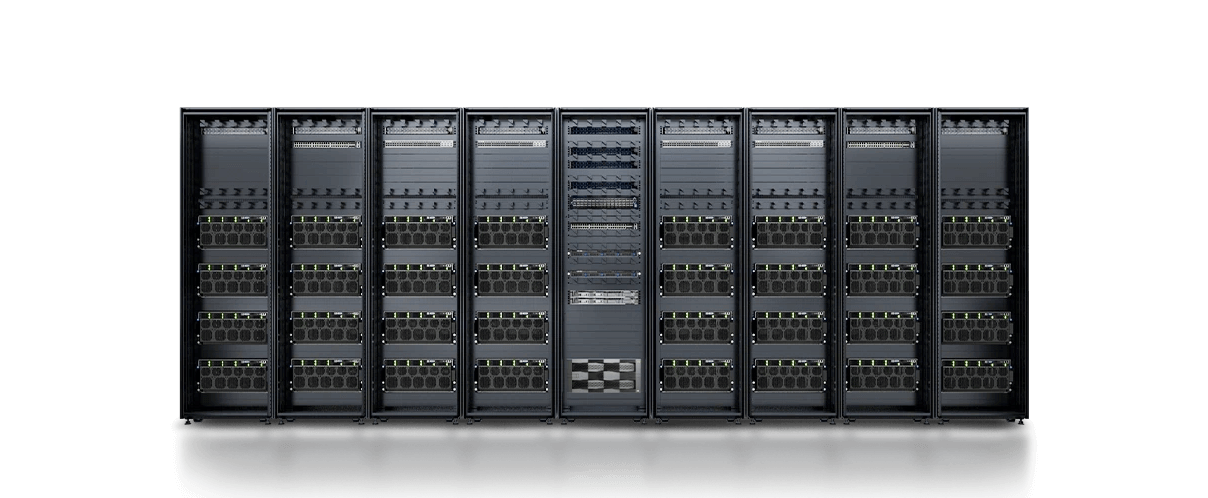

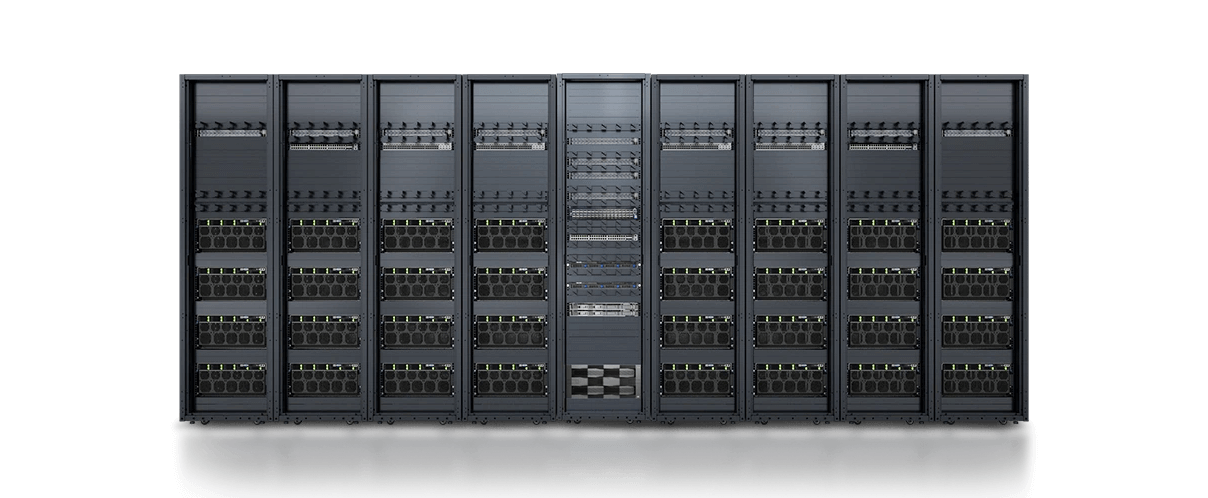

At the core of the AI factory, scalable supercomputing clusters convert big data into AI tokens at breakneck speed, using GPU-centric configurations designed for deep learning and other approaches to AI training. GIGABYTE's GIGAPOD links 256 state-of-the-art GPUs through blazing-fast interconnect technology into a cohesive unit that serves as the building block of modern AI infrastructure. Clients can choose AMD Instinct™, Intel® Gaudi®, or NVIDIA HGX™ Hopper/Blackwell GPU modules, and can pair them with either conventional air cooling or advanced liquid cooling to strike the right balance between investment and performance. The GIGAPOD total solution is rounded out with specialized management servers for infrastructure oversight and control, as well as the proprietary GPM software suite for DCIM, workload orchestration, MLOps, and more.

GIGAPOD configurations (air-cooled and liquid-cooled)

| GPUs Supported | GPU Server Form Factor | GPU Servers per Rack | Power Consumption | No. of Racks* (Rack Height) |
|---|---|---|---|---|
| NVIDIA HGX™ B300/B200/H200; AMD Instinct™ MI350X/MI325X/MI300X | 8U | 4 | 66kW | 8+1 (48U) |
| NVIDIA HGX™ B200 | 8OU | 4 | 55kW | 8+1 (44OU) |
| Intel® Gaudi® 3 | 8U | 4 | 62kW | 8+1 (48U) |
| NVIDIA HGX™ H200; AMD Instinct™ MI300X | 5U | 8 | 100kW | 4+1 (48U) |
| NVIDIA HGX™ H200; AMD Instinct™ MI300X | 5U | 4 | 50kW | 8+1 (42U) |
| NVIDIA HGX™ H200; AMD Instinct™ MI300X | 5U | 4 | 50kW | 8+1 (48U) |

*Compute racks + 1 management rack
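The 256-GPU figure quoted for GIGAPOD can be reproduced from the table rows. The sketch below is illustrative only: it assumes 8 GPUs per server (one 8-GPU baseboard each) and reads Power Consumption as a per-compute-rack figure. The table states neither outright, and `GigapodConfig` is a hypothetical name.

```python
from dataclasses import dataclass

GPUS_PER_SERVER = 8  # assumption: one 8-GPU baseboard per server


@dataclass
class GigapodConfig:
    """One row of the GIGAPOD table (hypothetical representation)."""
    name: str
    servers_per_rack: int
    compute_racks: int      # the "8" in "8+1"; the management rack holds no GPUs
    rack_power_kw: float    # assumption: Power Consumption is per compute rack

    def total_gpus(self) -> int:
        return self.compute_racks * self.servers_per_rack * GPUS_PER_SERVER

    def compute_power_kw(self) -> float:
        # Excludes the management rack, whose draw the table does not list
        return self.compute_racks * self.rack_power_kw


CONFIGS = [
    GigapodConfig("8U, 4 servers/rack", 4, 8, 66),
    GigapodConfig("5U, 8 servers/rack", 8, 4, 100),
    GigapodConfig("5U, 4 servers/rack", 4, 8, 50),
]

for cfg in CONFIGS:
    print(f"{cfg.name}: {cfg.total_gpus()} GPUs, ~{cfg.compute_power_kw():.0f} kW")
```

Every row resolves to 32 servers. The layouts differ only in how those servers are spread across racks, which trades rack count against per-rack power density.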


Inference at Scale with NVIDIA GB300 NVL72

To successfully deploy your AI inventions, you need a superscale inference platform capable of handling a massive number of requests simultaneously. NVIDIA GB300 NVL72 features a fully liquid-cooled, rack-scale design that unifies 72 NVIDIA Blackwell Ultra GPUs and 36 Arm®-based NVIDIA Grace™ CPUs in a single platform optimized for test-time scaling inference.

AI factories built on the GB300 NVL72, using NVIDIA Quantum-X800 InfiniBand or Spectrum™-X Ethernet paired with NVIDIA ConnectX®-8 SuperNIC™, deliver 50x higher output for reasoning-model inference than the NVIDIA Hopper™ platform, making them the undisputed leader in AI inference.

1. Management Switches
   - 2 x OOB management switches
   - 1 x Optional OS switch
2. 3 x 1U 33kW Power Shelves
3. 10 x Compute Trays
   - 1U XN15-CB0-LA01
4. 9 x NVIDIA NVLink™ Switch Trays
   - 1U NVLink Switch tray
   - 144 x NVLink ports per tray
   - Fifth-generation NVLink with 1.8TB/s GPU-GPU interconnect
5. 8 x Compute Trays
   - 1U XN15-CB0-LA01
6. 3 x 1U 33kW Power Shelves
7. Compatible with in-rack CDU or in-row CDU
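The rack components listed above account for the "72" GPUs and "36" CPUs in the product name. This tally is a sketch that assumes each GB300 Superchip pairs one Grace CPU with two Blackwell Ultra GPUs, matching the compute tray's two Superchips and four HBM3E GPU memory stacks. It also assumes each GPU exposes 18 fifth-generation NVLink links at 100 GB/s each, which is how the 1.8TB/s per-GPU figure breaks down. The power-shelf total is nameplate capacity, not measured draw.

```python
# Tally of the GB300 NVL72 rack components (counts from the rack layout list)
COMPUTE_TRAYS = 10 + 8          # items 3 and 5
SUPERCHIPS_PER_TRAY = 2         # per the XN15-CB0-LA01 tray spec
CPUS_PER_SUPERCHIP = 1          # assumption: one Grace CPU per GB300 Superchip
GPUS_PER_SUPERCHIP = 2          # assumption: two Blackwell Ultra GPUs per Superchip

NVLINK_SWITCH_TRAYS = 9
NVLINK_PORTS_PER_SWITCH_TRAY = 144
NVLINK_LINKS_PER_GPU = 18       # assumption: fifth-generation NVLink, 18 links per GPU
GB_S_PER_LINK = 100             # assumption: 100 GB/s (bidirectional) per link

POWER_SHELVES = 3 + 3           # items 2 and 6
KW_PER_SHELF = 33

superchips = COMPUTE_TRAYS * SUPERCHIPS_PER_TRAY
gpus = superchips * GPUS_PER_SUPERCHIP
cpus = superchips * CPUS_PER_SUPERCHIP

# Every GPU-side NVLink link should land on exactly one switch-tray port
switch_ports = NVLINK_SWITCH_TRAYS * NVLINK_PORTS_PER_SWITCH_TRAY
gpu_links = gpus * NVLINK_LINKS_PER_GPU
per_gpu_tb_s = NVLINK_LINKS_PER_GPU * GB_S_PER_LINK / 1000

# Nameplate total; usable capacity depends on the shelves' redundancy scheme
shelf_capacity_kw = POWER_SHELVES * KW_PER_SHELF

print(f"GPUs: {gpus}, CPUs: {cpus}")
print(f"NVLink switch ports: {switch_ports}, GPU-side links: {gpu_links}")
print(f"Per-GPU NVLink bandwidth: {per_gpu_tb_s} TB/s")
print(f"Power-shelf nameplate capacity: {shelf_capacity_kw} kW")
```

Under these assumptions, the nine switch trays provide exactly one port for every GPU-side link, so all 72 GPUs share a single NVLink domain.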

Compared with NVIDIA HGX H100:

- Fast Memory: 60X
- HBM Bandwidth: 20X
- Networking Bandwidth: 18X

XN15-CB0-LA01 Compute Tray

- 2 x NVIDIA GB300 Grace™ Blackwell Ultra Superchips

- 4 x 279GB HBM3E GPU memory

- 2 x 480GB LPDDR5X CPU memory

- 8 x E1.S Gen5 NVMe drive bays

- 4 x NVIDIA ConnectX®-8 SuperNIC™ 800Gb/s OSFP ports

- 1 x NVIDIA® BlueField®-3 DPU
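Per-tray figures scale to the full rack by the 18 compute trays in the GB300 NVL72 rack layout (10 + 8). A minimal sketch of that arithmetic, assuming decimal units (1 TB = 1,000 GB, 1 Tb/s = 1,000 Gb/s); `rack_totals` is a hypothetical helper.

```python
TRAYS_PER_RACK = 18  # 10 + 8 compute trays in the GB300 NVL72 rack layout

# Per-tray resources from the XN15-CB0-LA01 spec list
TRAY = {
    "hbm3e_gb": 4 * 279,      # 4 x 279GB HBM3E GPU memory
    "lpddr5x_gb": 2 * 480,    # 2 x 480GB LPDDR5X CPU memory
    "nic_gbps": 4 * 800,      # 4 x 800Gb/s ConnectX-8 SuperNIC ports
    "e1s_bays": 8,            # 8 x E1.S Gen5 NVMe drive bays
}


def rack_totals(tray: dict[str, int], trays: int = TRAYS_PER_RACK) -> dict[str, int]:
    """Multiply each per-tray quantity by the number of trays (hypothetical helper)."""
    return {key: value * trays for key, value in tray.items()}


totals = rack_totals(TRAY)
print(f"HBM3E per rack:   {totals['hbm3e_gb'] / 1000:.2f} TB")
print(f"LPDDR5X per rack: {totals['lpddr5x_gb'] / 1000:.2f} TB")
print(f"NIC bandwidth:    {totals['nic_gbps'] / 1000:.1f} Tb/s")
print(f"E1.S bays:        {totals['e1s_bays']}")
```

The NIC total sums the scale-out SuperNIC ports only. It is separate from the NVLink fabric that connects GPUs inside the rack.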


The next step after developing your AI models is to build applications and tools on them and deploy those throughout your organization, where hundreds or even thousands of users might access them at any given moment. You need a superscale inference platform that can handle a multitude of simultaneous requests so that your AI success can supercharge productivity.

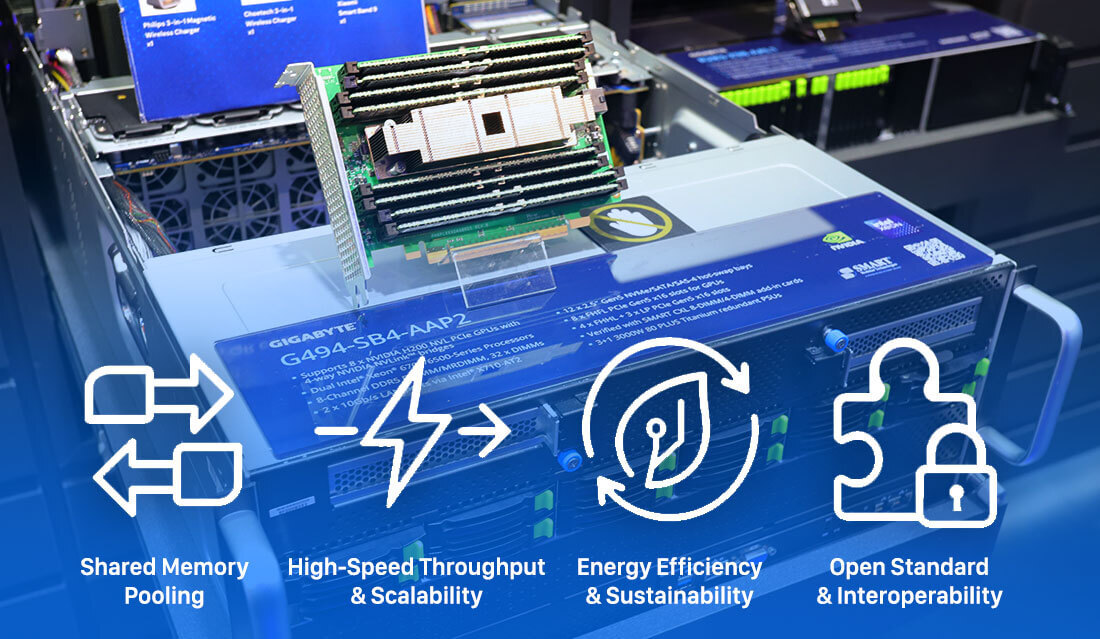

XL44-SX2-AAS1 with RTX PRO™ 6000 Blackwell Server Edition GPUs

- NVIDIA RTX PRO™ server with ConnectX®-8 SuperNIC switch

- Configured with 8 x NVIDIA RTX PRO™ 6000 Blackwell Server Edition GPUs

- Configured with 1 x NVIDIA® BlueField®-3 DPU

- Onboard 400Gb/s InfiniBand/Ethernet QSFP ports with PCIe Gen6 switching for peak GPU-to-GPU performance

- Dual Intel® Xeon® 6700/6500-Series Processors

- 8-Channel DDR5 RDIMM/MRDIMM, 32 x DIMMs

- 2 x 10Gb/s LAN ports via Intel® X710-AT2

- 8 x 2.5" Gen5 NVMe hot-swap bays

- 2 x M.2 slots with PCIe Gen4 x2 interface

- 3+1 3200W 80 PLUS Titanium redundant power supplies
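A "3+1" rating means three supplies carry the load and a fourth is a spare, so the server keeps running if any one supply fails. A minimal sketch of that arithmetic, assuming even load sharing and ignoring any input-voltage derating; the constant and function names are hypothetical.

```python
ACTIVE_PSUS = 3       # the "3" in 3+1
SPARE_PSUS = 1        # the "+1"
PSU_RATING_W = 3200   # per-supply rating from the spec list


def remaining_capacity_w(failed: int) -> int:
    """Capacity left after `failed` supplies drop out (assumes even load sharing)."""
    working = max(ACTIVE_PSUS + SPARE_PSUS - failed, 0)
    return working * PSU_RATING_W


def redundant_capacity_w() -> int:
    """Largest load that still fits after SPARE_PSUS supplies have failed."""
    return remaining_capacity_w(SPARE_PSUS)


print(redundant_capacity_w())    # 9600
print(remaining_capacity_w(0))   # 12800
```

Sizing against `redundant_capacity_w()` rather than the all-supplies total is what preserves the fault tolerance the "+1" is meant to provide.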