Introduction

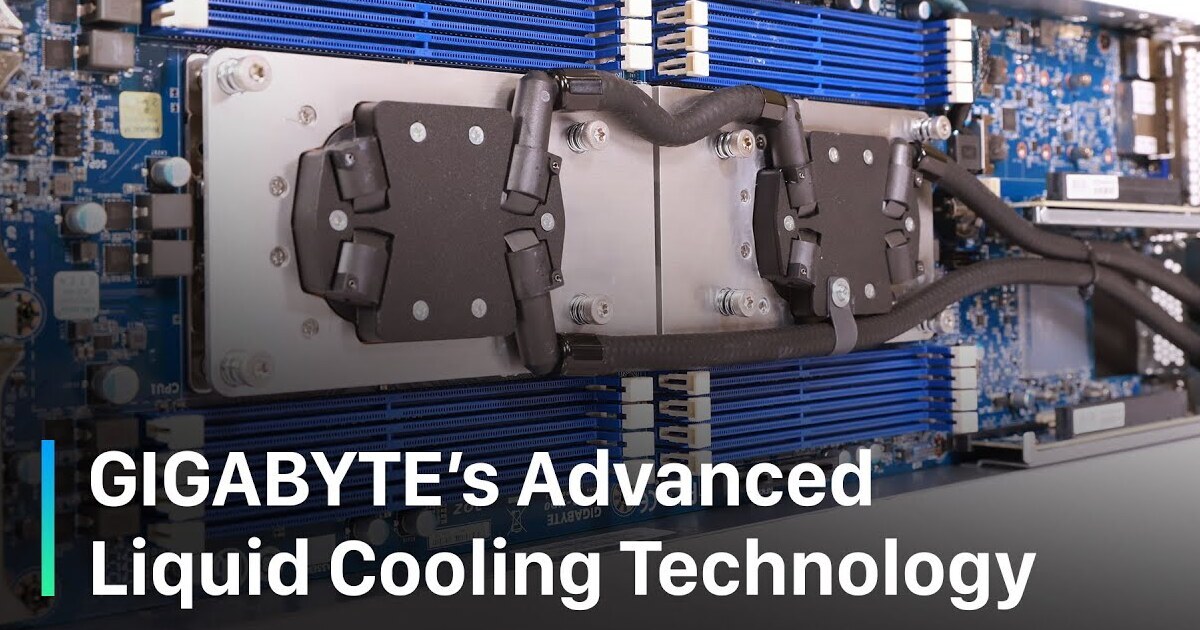

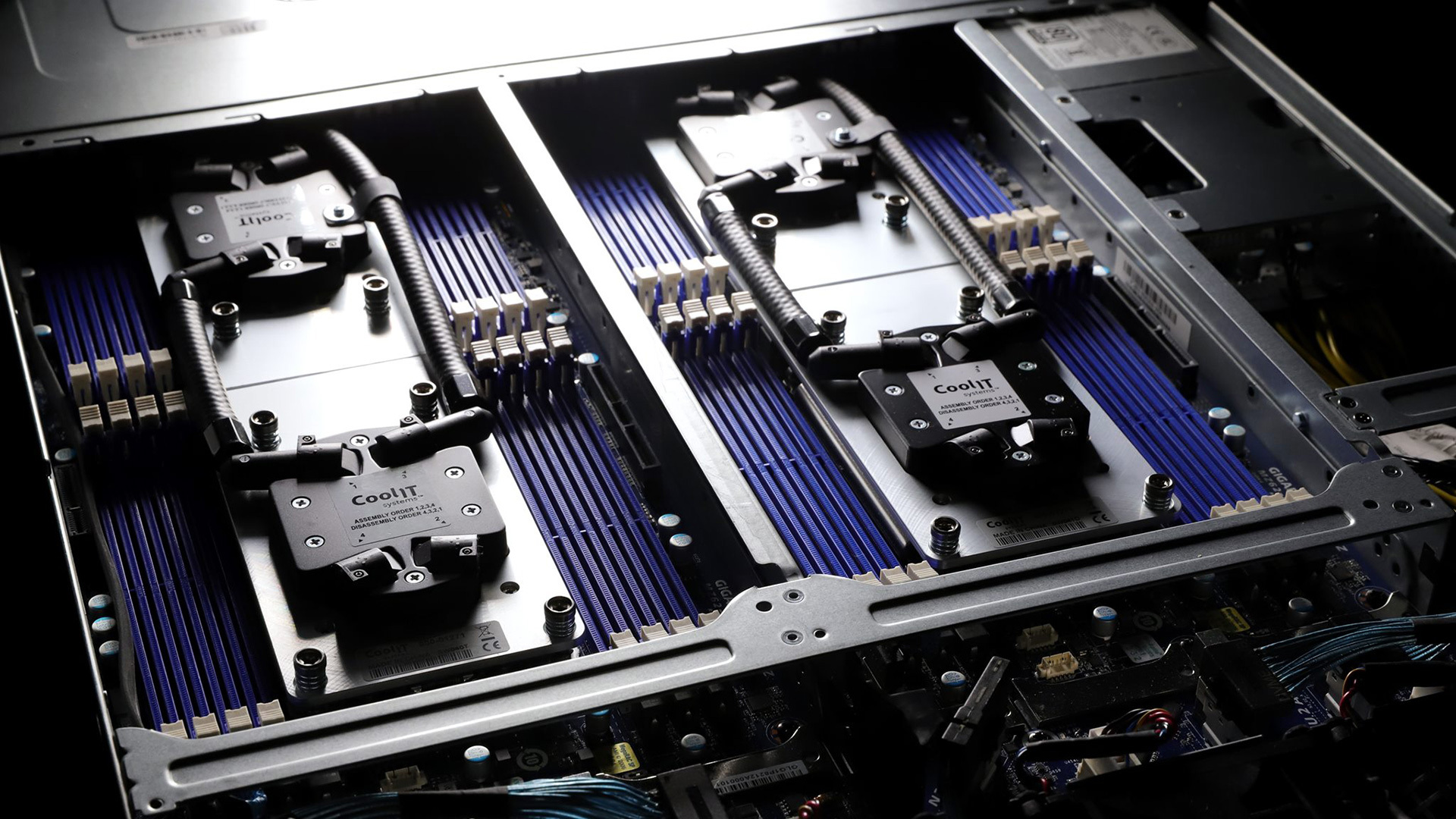

GIGABYTE has teamed up with leading cooling solutions provider CoolIT Systems to offer high-density servers with factory-installed liquid cooling. Ready to drop in to liquid-to-air or liquid-to-liquid cooling systems, these servers help customers manage the increasing heat loads of modern data center environments more efficiently.

Success Stories

German Aerospace Center (DLR) HPC Cluster

GIGABYTE worked together with CoolIT and NEC to deploy a liquid-cooled HPC cluster at the German Aerospace Center (DLR) for research and development in aviation, aerospace and transportation. The cluster is built from GIGABYTE H261-Z60 servers comprising 2,300 compute nodes with AMD EPYC™ 7601 processors, delivering up to 147,200 cores of computing power, and is direct liquid-cooled at a warm-water inlet temperature of 35°C. The entire system was designed to operate at high coolant temperatures, creating additional opportunities for heat reuse.
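The core count quoted for the DLR cluster follows directly from the node count. The quick check below assumes that each H261-Z60 compute node is dual-socket and that the AMD EPYC 7601 has 32 cores per socket; neither figure is stated explicitly in this case study.

```python
# Sanity check of the DLR cluster core count.
# Assumptions (not stated in the case study): each compute node is
# dual-socket, and the AMD EPYC 7601 has 32 cores per socket.
NODES = 2_300
SOCKETS_PER_NODE = 2
CORES_PER_EPYC_7601 = 32

total_cores = NODES * SOCKETS_PER_NODE * CORES_PER_EPYC_7601
print(f"{total_cores:,} cores")  # 147,200 cores
```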

Cooling Challenges for Cloud Computing & HPC

As cloud computing adoption skyrockets and demand grows for products and services enabled by Artificial Intelligence (AI), High Performance Computing (HPC) and the Internet of Things (IoT), the number of large, centralized data centers worldwide is also growing.

These data centers must also deliver ever more computing power, using increasingly powerful chips from Intel, AMD and NVIDIA that generate more heat, in server systems that pack several of these chips into a single chassis. Expensive real estate is also pushing data centers to increase computing density per square foot, which worsens the cooling problem as hardware is packed closer together with less space for airflow. What's more, cooling fans cause vibration that can damage other components within the server, and they create dangerous noise levels for maintenance workers.

It is estimated that data centers now consume more than 2% of the world's electricity, around 272 billion kWh. Much of this energy goes not to powering the computer hardware itself but to facility overhead, mainly air conditioning. Traditional air cooling in data centers is quite inefficient: it wastes over 400 trillion BTUs of heat every year and generates a massive carbon footprint.

Warm liquid cooling transfers heat away from electrical components much more efficiently than air, requiring far less energy for cooling. In addition, because liquid-cooled servers do not need air cooling equipment such as large fans and heat sinks, they can support a much greater density of CPU and GPU components in a given space, delivering greater compute performance per square foot.

The popularization and standardization of liquid cooling technology mean that it is now a feasible choice for small and mid-sized data centers and for smaller HPC cluster deployments.

The Solution: GIGABYTE High Density Servers with CoolIT DLC

GIGABYTE has partnered with liquid cooling technology leader CoolIT to offer CoolIT's DLC (Direct Liquid Cooling) system as a standard option for our H262 Series High Density Server Systems.

Ultra-Dense, Ultra-Powerful Computing Performance

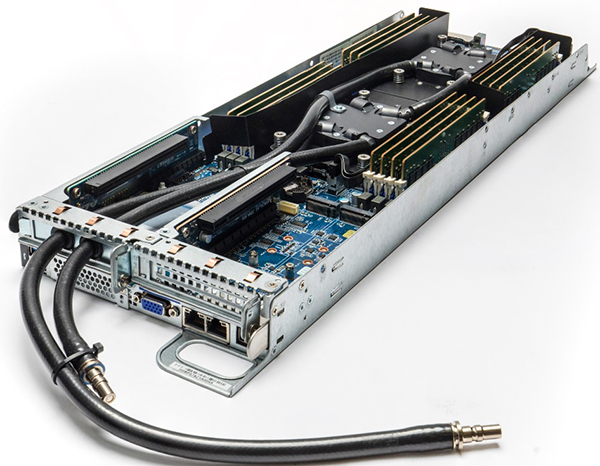

GIGABYTE's AMD EPYC H262 Series servers are among the densest and most powerful server platforms currently on the market, and only liquid cooling lets them reach their full performance potential. Each 2U server features four dual-socket compute nodes that can support extremely powerful processors such as the AMD EPYC 7H12, a 64-core, 128-thread monster with a 2.6GHz base clock that would previously have been impossible to integrate into this kind of high-density system using traditional air cooling. Using the 7H12, a single 2U server can support up to 512 cores and 1,024 threads of computing power, as well as 8TB (64 x 128GB LRDIMM) of 3200MHz memory.

One node with coldplate loop
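The per-chassis figures for a 7H12-equipped H262 server can be reproduced from the configuration described above: four dual-socket nodes, 64 cores and 128 threads per CPU, and 64 x 128GB LRDIMMs. Note that 64 x 128GB works out to exactly 8TB (counting 1TB as 1,024GB). The variable names are illustrative only.

```python
# Per-chassis totals for a 2U H262 server populated with AMD EPYC 7H12 CPUs.
NODES_PER_CHASSIS = 4    # four compute nodes per 2U chassis
SOCKETS_PER_NODE = 2     # dual-socket nodes
CORES_PER_CPU = 64       # EPYC 7H12: 64 cores
THREADS_PER_CORE = 2     # 128 threads per CPU, i.e. 2 threads per core
DIMMS_PER_CHASSIS = 64   # 64 x 128GB LRDIMM
DIMM_SIZE_GB = 128

cpus = NODES_PER_CHASSIS * SOCKETS_PER_NODE
cores = cpus * CORES_PER_CPU
threads = cores * THREADS_PER_CORE
memory_tb = DIMMS_PER_CHASSIS * DIMM_SIZE_GB / 1024  # 1TB = 1,024GB here

print(cpus, cores, threads, memory_tb)  # 8 512 1024 8.0
```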

Ready to Integrate Modular Building Blocks

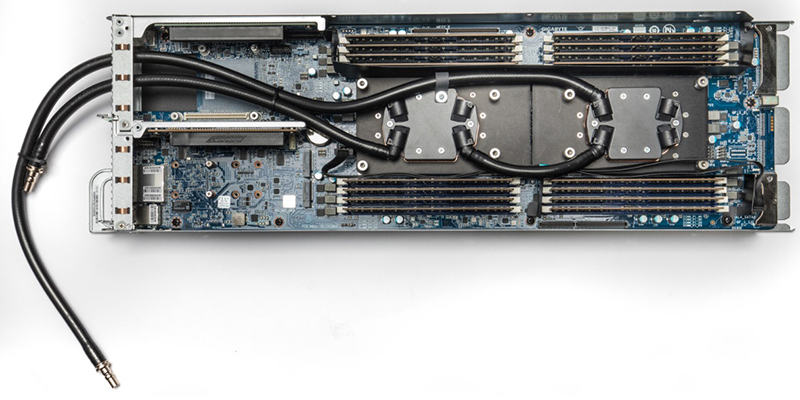

CoolIT's DLC platform is a modular, rack-based cooling solution that allows for dramatic increases in rack density, component performance and power efficiency. DLC uses warm liquid rather than cold air to dissipate heat from computer and server components. By capturing component heat in a liquid path, DLC enables higher component performance and reliability, higher densities, and lower data center operating expenses by reducing or eliminating the need for chillers and CRAC (Computer Room Air Conditioning) units.

CoolIT’s DLC modular building blocks

CoolIT’s DLC System consists of the following three building blocks:

1. Passive Cold Plate Loops

Factory-installed on the CPUs and other components (memory modules, networking card) of each GIGABYTE server node, replacing the heatsinks. Because CoolIT's coldplate loops are passive, there is no separate pumping mechanism within the coldplate itself that could fail.

Passive coldplate loops

2. Rack Manifold

Built to fit any rack, the CoolIT Rack Manifold connects each liquid-cooled server node to the rack-based CDUs (Coolant Distribution Units). It features a reliable stainless steel design and 100% dry-break quick disconnects for fast maintenance of any server node; the disconnects are color-coded red (hot) and blue (cold) so they can be matched easily with each cold plate loop. The rack manifold is easy to install and occupies only a single PDU space on each side of the rack. It can also be customized for different racks or numbers of connections.

Rack manifolds

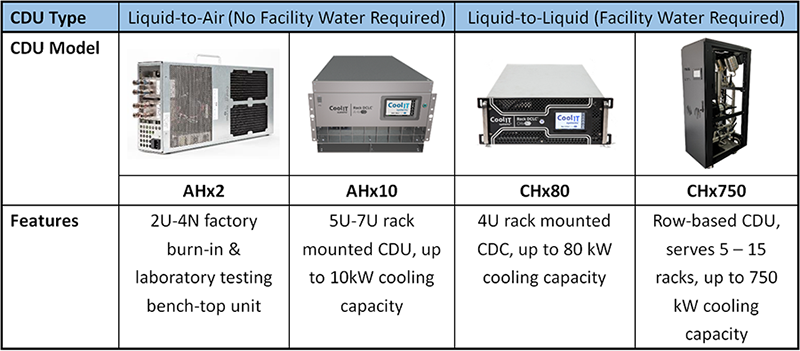

3. Coolant Distribution Unit (CDU)

CoolIT's CDUs connect to the rack manifold and are available in both liquid-to-air designs (for traditional air-cooled data centers without existing liquid cooling infrastructure) and liquid-to-liquid designs (for data centers with liquid cooling infrastructure). They feature an intelligent control system that manages coolant supply temperature and flow to the servers, and they offer easy rack installation and simple connection and disconnection with 100% dry-break quick disconnects.

Coolant distribution units
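To get a feel for the coolant flow a CDU has to manage, the steady-state energy balance Q = ṁ·c_p·ΔT relates heat load, coolant mass flow and the temperature rise across the cold plates. The sketch below is illustrative only and is not CoolIT's sizing method. It assumes a water-like coolant (c_p ≈ 4186 J/(kg·K), density ≈ 1 kg/L), a hypothetical 10 K supply-to-return temperature rise, and a hypothetical heat load of 8 x 280 W, taking 280 W as the EPYC 7H12's rated TDP and ignoring memory and NIC heat.

```python
# Illustrative coolant-flow estimate from the energy balance Q = m_dot * c_p * dT.
# All inputs are assumptions for illustration, not CoolIT specifications.
WATER_CP_J_PER_KG_K = 4186.0   # specific heat of water
WATER_DENSITY_KG_PER_L = 1.0   # approximate density of water


def required_flow_lpm(heat_w: float, delta_t_k: float) -> float:
    """Volumetric flow (litres per minute) needed to carry heat_w watts
    with a coolant temperature rise of delta_t_k kelvin."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0


# Hypothetical load: eight 280 W CPUs in one 2U chassis (CPU heat only).
chassis_heat_w = 8 * 280.0
print(f"{required_flow_lpm(chassis_heat_w, 10.0):.2f} L/min")  # 3.21 L/min
```

Halving the allowed temperature rise doubles the required flow, which illustrates why supply temperature and flow rate are managed together.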

Maximum Energy Efficiency

Direct Liquid Cooling (DLC) uses the exceptional thermal conductivity of liquid to provide dense, concentrated cooling to targeted areas. By using DLC and warm liquid (ASHRAE W2 to W5, up to 45°C), the dependence on fans and expensive air handling systems is drastically reduced. This results in lower overall power use, a significantly smaller carbon footprint and a lower (better) data center PUE (Power Usage Effectiveness).
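PUE, as defined by The Green Grid, is total facility energy divided by IT equipment energy, so values closer to 1.0 are better. The sketch below uses purely hypothetical energy figures to show how cutting cooling overhead lowers PUE; the numbers are not measurements of any GIGABYTE or CoolIT deployment, and the function name is illustrative.

```python
# Power Usage Effectiveness: total facility energy / IT equipment energy.
# The energy figures below are hypothetical, chosen only to illustrate the ratio.


def pue(it_kwh: float, cooling_kwh: float, other_overhead_kwh: float) -> float:
    """Return PUE; 1.0 would mean every kWh goes to IT equipment."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    total_kwh = it_kwh + cooling_kwh + other_overhead_kwh
    return total_kwh / it_kwh


air_cooled = pue(it_kwh=1000.0, cooling_kwh=500.0, other_overhead_kwh=100.0)
liquid_cooled = pue(it_kwh=1000.0, cooling_kwh=100.0, other_overhead_kwh=100.0)
print(f"air: {air_cooled:.2f}, liquid: {liquid_cooled:.2f}")  # air: 1.60, liquid: 1.20
```

Because the IT load sits in the denominator, only the overhead terms (cooling, power distribution, lighting) move PUE, which is why reducing reliance on air handling shows up directly in this metric.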

GIGABYTE Products

Currently GIGABYTE offers the following High Density Servers factory installed with CoolIT DLC technology. Additional models may be available on request.