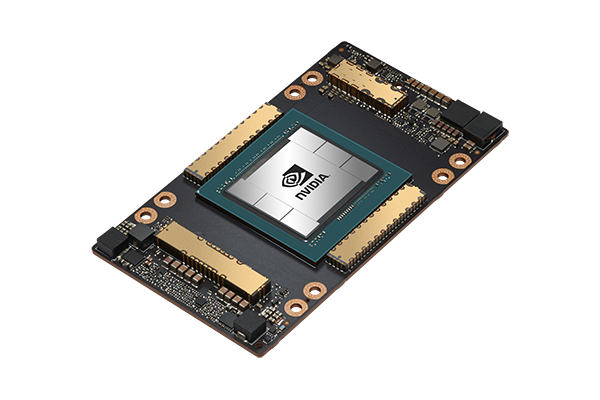

NVIDIA A100 Tensor Core GPU

Unprecedented Acceleration at Every Scale

The Most Powerful Compute Platform for Every Workload

The NVIDIA A100 Tensor Core GPU delivers unprecedented

acceleration—at every scale—to power the world's highest performing elastic data centers for AI, data analytics, and high-performance computing (HPC) applications. As the engine of

the NVIDIA data center platform, A100 provides up to 20X higher

performance over the prior NVIDIA Volta™ generation. A100 can

efficiently scale up or be partitioned into seven isolated GPU

instances with Multi-Instance GPU (MIG), providing a unified

platform that enables elastic data centers to dynamically adjust

to shifting workload demands.

NVIDIA A100 Tensor Core technology supports a broad range

of math precisions, providing a single accelerator for every

workload. The latest generation A100 80GB doubles GPU memory

and debuts the world’s fastest memory bandwidth at 2 terabytes

per second (TB/s), speeding time to solution for the largest

models and most massive datasets.

A100 is part of the complete NVIDIA data center solution that

incorporates building blocks across hardware, networking,

software, libraries, and optimized AI models and applications

from the NVIDIA NGC™ catalog. Representing the most powerful

end-to-end AI and HPC platform for data centers, it allows

researchers to deliver real-world results and deploy solutions

into production at scale.

Applications

HPC & AI

HPC and AI go hand in hand. HPC has the compute infrastructure, storage, and networking that lays the groundwork for AI training with accurate and reliable models. Additionally, there are a lot of precision choices for either HPC or AI workloads.

Engineering & Sciences

Big data and computational simulations are common needs of engineers and scientists. High parallel processing, low latency, and high bandwidth help create an environment for server virtualization.

Cloud

On-premise HPC continues to grow, yet cloud HPC is growing at a faster rate. By moving to the cloud companies can quickly and easily utilize compute resources by demand. Cloud computing can use the latest and greatest technology.

NVIDIA A100 - Ingenious Technology

The NVIDIA A100 GPU is engineered to provide as much AI and HPC computing power possible with the new NVIDIA Ampere architecture and optimizations. Built on TSMC 7nm N7 FinFET, A100 has improved transistor density, performance, and power efficiency compared to prior 12nm technology. With the new Multi-Instance GPU (MIG) capabilities in Ampere GPUs, A100 can create the best virtualized GPU environments possible for Cloud Service Providers.

- NVIDIA Ampere Architecture:

Whether using MIG to partition an A100 GPU into smaller instances or NVLink to connect multiple GPUs to speed large-scale workloads, A100 can readily handle different-sized acceleration needs, from the smallest job to the biggest multi-node workload. A100's versatility means IT managers can maximize the utility of every GPU in their data center, around the clock. - Third Generation Tensor Cores:

NVIDIA A100 delivers 312 teraFLOPS (TFLOPS) of deep learning performance. That's 20X the Tensor floating-point operations per second (FLOPS) for deep learning training and 20X the Tensor tera operations per second (TOPS) for deep learning inference compared to NVIDIA Volta GPUs. - Next Generation NVLink:

NVIDIA NVLink in A100 delivers 2X higher throughput compared to the previous generation. When combined with NVIDIA NVSwitch™, up to 16 A100 GPUs can be interconnected at up to 600 gigabytes per second (GB/sec), unleashing the highest application performance possible on a single server. NVLink is available in A100 SXM GPUs via HGX A100 server boards and in PCIe GPUs via an NVLink Bridge for up to 2 GPUs. - Multi-Instance GPU (MIG):

An A100 GPU can be partitioned into as many as seven GPU instances, fully isolated at the hardware level with their own high-bandwidth memory, cache, and compute cores. MIG gives developers access to breakthrough acceleration for all their applications, and IT administrators can offer right-sized GPU acceleration for every job, optimizing utilization and expanding access to every user and application. - High-Bandwidth Memory (HBM2E):

With up to 80 gigabytes of HBM2e, A100 delivers the world's fastest GPU memory bandwidth of over 2TB/s, as well as a dynamic random-access memory (DRAM) utilization efficiency of 95%. A100 delivers 1.7X higher memory bandwidth over the previous generation. - Structural Sparsity:

AI networks have millions to billions of parameters. Not all of these parameters are needed for accurate predictions, and some can be converted to zeros, making the models “sparse” without compromising accuracy. Tensor Cores in A100 can provide up to 2X higher performance for sparse models. While the sparsity feature more readily benefits AI inference, it can also improve the performance of model training.

NVIDIA A100 - Ingenious Technology

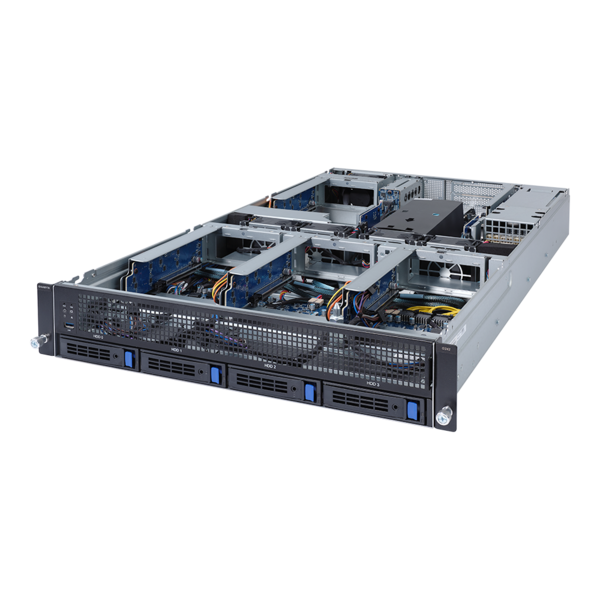

Server for NVIDIA HGX A100 8-GPU Solution

Server for NVIDIA A100 PCIe GPU- 10 GPU System

High-performance

A100 achieves top tier performance in a broad range of math precisions, with the SXM module doubling that of PCIe GPU in TF32, FP16, and BFLOAT16.

Scalability

Combining NVLink with high speed connections it is possible to create large compute clusters as A100 can scale to thousands of A100s using NVSwitch.

Fast Throughput

Fast GPU-GPU and CPU-GPU communication achieved with A100 using NVLink, NVSwitch, and InfiniBand. A100 hits up to 2,039GB/s throughput.

High Utilization

Multi-instance GPU technology allows for a single A100 80GB GPU to partition into seven MIGs for consistent and predictable utilization of resources.

| A100 80GB PCIe | A100 40GB SXM | A100 80GB SXM | |

|---|---|---|---|

| FP64 | 9.7 TFLOPS | ||

| FP64 Tensor Core | 19.5 TFLOPS | ||

| FP32 | 19.5 TFLOPS | ||

| Tensor Float 32 (TF32) | 156 TFLOPS | 312 TFLOPS* | ||

| BFLOAT16 Tensor Core | 312 TFLOPS | 624 TFLOPS* | ||

| FP16 Tensor Core | 312 TFLOPS | 624 TFLOPS* | ||

| INT8 Tensor Core | 624 TOPS | 1248 TOPS* | ||

| GPU Memory | 80GB HBM2e | 40GB HBM2 | 80GB HBM2e |

| GPU Memory Bandwidth | 1,935GB/s | 1,555GB/s | 2,039GB/s |

| Max Thermal Design Power (TDP) | 300W | 400W | 400W |

| Multi-Instance GPU | Up to 7 MIGs @ 10GB | Up to 7 MIGs @ 5GB | Up to 7 MIGs @ 10GB |

| Form Factor | PCIe | SXM | |

| Interconnect | NVIDIA® NVLink® Bridge for 2 GPUs: 600GB/s ** PCIe Gen4: 64GB/s |

NVLink: 600GB/s PCIe Gen4: 64GB/s |

|

| Server Options | Partner and NVIDIA-Certified Systems™ with 1-8 GPUs | NVIDIA HGX™ A100-Partner and NVIDIA-Certified Systems with 4,8, or 16 GPUs NVIDIA DGX™ A100 with 8 GPUs |

|

* With sparsity

** SXM4 GPUs via HGX A100 server boards; PCIe GPUs via NVLink Bridge for up to two GPUs

NVIDIA-Certified Systems™

Complex AI workloads, including clusters, are becoming more common and system integrators and IT staff must quickly adapt to the changing technology. In order to improve system compatibility and confidence, NVIDIA has introduced the NVIDIA-Certified Systems program that validates servers based on hardware and NVIDIA NGC Catalog's software. Currently the NVIDIA-Certified Systems focus on NVIDIA Ampere architecture and NVIDIA Mellanox network adapters, but the program will expand. As well, customers that are familiar with NVIDIA NGC Support Services can use the services for NVIDIA-Certified.

Related Products

5/6

HPC/AI Server - AMD EPYC™ 7003/7002 - 2U UP 4 x PCIe Gen4 GPUs

| Application:

AI

,

AI Training

,

AI Inference

&

Visual Computing

6/6

HPC/AI Arm Server - Ampere® Altra® Max - 2U UP 4 x PCIe Gen4 GPUs

| Application:

AI

,

AI Training

,

AI Inference

,

Visual Computing

&

HPC